As a presenter on our recent technology-focused web clinic, I had the pleasure of learning about an experiment devised by my colleague, Jon Powell. It illustrates why we must never assume that we test in a vacuum, free of external factors that can skew our data (including external factors that we create ourselves).

If you’d like to learn more about this experiment in its entirety, you can hear it firsthand from Jon on the web clinic replay. SPOILER ALERT: If you choose to keep reading, be warned that I am now giving away the ending.

According to the testing platform Jon was using, the aggregate results came up inconclusive. None of the treatments outperformed the control with a statistically significant difference. What was interesting, however, is that the data indicated a fairly large difference in performance for a couple of the treatments.

So after reanalyzing the data and adjusting the test duration to exclude the results from when an unintended (by our researchers at least) promotional email had been sent out, Jon saw that each of the treatments significantly outperformed the control with conclusive validity.

In other words, if Jon had blindly trusted his testing tool, he would have missed a 31% gain. Even worse, this gain came at the beginning of a six-month-long testing-optimization cycle. Had Jon accepted the inaccurate data and believed he had learned something he really hadn’t, that conclusion more than likely would have sent him down a path of optimizing under false findings and assumptions.

To use a simple pre-GPS era analogy, if you make a wrong turn at the beginning of a 600-mile road trip and keep heading in the wrong direction, you will end up much farther off the mark than if you take the wrong road when you’re just a mile from your destination. For many businesses, though, wrong turns and misdirections can cost anywhere from thousands to millions of dollars in lost time and revenue.

Worst of all, this email came from the Research Partner itself. As we run into many times, they unwittingly sabotaged their own tests. With the Internet being a dynamic place, it is next to impossible to avoid every external validity threat to your test, but at the very least we need to make sure that we are not introducing threats with internal campaigns to the same audience.

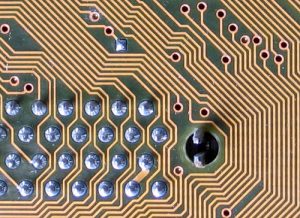

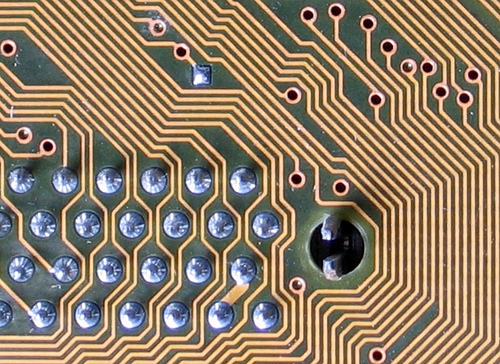

This is not to say we should stop those campaigns; just be aware of their potential effects on testing. That awareness, at least until computers become sentient beings, requires human involvement. Of course, that’s just one area where a little human curiosity is essential…

Do not let testing tools overshadow the human element of creativity.

Sure, many tools are now evolving to the point they will create “treatments” for you based on combinations, uploading content etc. But what this can create is a “perfect” sub-standard general design. These tools are limited to the inputs we give them so the optimization that can occur is constrained, where a human could take findings and radically change an entire process.

Begin by taking a step back, putting yourself in your customers’ shoes, and taking a human look at the big picture. Ask, “Is this even the proper overall design?” rather than taking the easy shortcut of testing a randomly generated combination of calls to action or headlines.

Multivariate testing (MVT) has its place. In fact, here at MarketingExperiments we use it frequently. But as with any tool, the result is only as good as the craftsman. So, when using MVT, make sure you have not ignored the big picture of what your users want by building your tests on the same sub-standard message presentation you are trying to optimize in the first place.

So how do we trust our tests? Here are steps for better setup.

- Sound Test Design – The test you are performing must represent the same environment where you are going to potentially apply the results. Many times we find people stretching the finding to different audiences, and then wondering why the results do not translate. For example, are you taking the lessons learned in email testing and applying them to your PPC ads? Well, they could each have different audiences that react in different ways.

- Research Question – Have you set a clear and specific objective for testing? Without establishing a clear objective, it is possible to get lost looking at a vast array of data points and trying to correlate them all. The research question also provides guidance on what items should be included in a test and what should be reserved for later.

- Proper Execution – Are you selecting the right test audience? Based on this audience, will you be able to apply the results to other aspects of your web communications? Beyond that, you must ensure you have enough of this audience to reach a statistically valid conclusion (i.e., really learn something, not just think you learned something). To do that, you must be recording accurate measurements. Double-check your metrics technology before launching a test (more on that in the checklist).

- Confidence – Establish a standard for your results to uphold. Simply, you are trying to arrive at a finding that you have seen replicated enough times that you can confidently say, “we have sufficient information to make a conclusion on the research question we sought to answer.” The number of times you need to measure will be a decision based on the volatility of the experimental environment and other factors. At the end of the day, though, it will also boil down to a business decision to continue or move on. This is something that needs to be agreed to and developed in-house. Just understand that while setting this mark low carries some risks, some processes with low traffic or time sensitivity necessitate that we move on with lower confidence levels at times.
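To make "enough of this audience" concrete, here is a minimal sketch of a common textbook sample-size approximation for comparing two conversion rates. It is not the method our analysts or any particular testing tool uses; the function name, the 80% power default, and the example rates are all assumptions chosen for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def visitors_per_arm(baseline_rate, relative_lift, confidence=0.95, power=0.80):
    """Approximate visitors needed in EACH arm of a two-arm test.

    Uses the standard normal approximation for a two-sided comparison
    of two proportions. All inputs are planning assumptions, not data.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)  # rate we hope the treatment reaches
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Hypothetical planning numbers: 5% baseline, hoping to detect a 20% relative lift.
    print(visitors_per_arm(0.05, 0.20))
    # Accepting a lower confidence mark reduces the traffic required.
    print(visitors_per_arm(0.05, 0.20, confidence=0.90))
```

The trade-off described in the Confidence step shows up directly: lowering `confidence` shrinks the required audience, which is why low-traffic processes sometimes force a lower mark.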

At MarketingExperiments, we try to stick to a 95% statistical significance as much as possible. However, there are times where we have to accept a lower mark.

But remember, statistical significance from a piece of software cannot alert you to data that is inherently wrong or warn you that something else has influenced (and perhaps invalidated) a test; it only tells you that the results were unlikely to happen by chance. Omniture (interesting alert for segmented data) and Google Analytics (GA intelligence) have been dabbling in this area, but still require human interaction and do not cover all aspects.
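For readers who want to see what that number is made of, the sketch below runs a pooled two-proportion z-test and reports "confidence" as one minus the two-sided p-value, a convention many marketing tools follow. This is an assumption about how such a figure might be derived, not a description of any specific platform, and the visit and conversion counts are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist


def confidence_of_difference(control_conv, control_visits, treat_conv, treat_visits):
    """Return 1 - p_value for a two-sided, pooled two-proportion z-test."""
    p_control = control_conv / control_visits
    p_treat = treat_conv / treat_visits
    pooled = (control_conv + treat_conv) / (control_visits + treat_visits)
    std_err = sqrt(pooled * (1 - pooled) * (1 / control_visits + 1 / treat_visits))
    if std_err == 0:
        return 0.0  # no variation at all, so nothing can be concluded
    z = (p_treat - p_control) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return 1 - p_value


if __name__ == "__main__":
    # Hypothetical counts: 100 of 2,000 control visits convert vs. 140 of 2,000 treatment visits.
    level = confidence_of_difference(100, 2000, 140, 2000)
    print(f"confidence: {level:.3f}", "meets 95% mark" if level >= 0.95 else "below 95% mark")
```

Notice that nothing in the calculation knows where the visits came from. If a promotional email inflated one period's numbers, the arithmetic will happily report high confidence in a contaminated result.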

So make sure that you perform your due diligence with tool setup, test design, and data analysis – because it is very easy to gain confidence in the wrong decision with bad data from a tool that says it is 95% confident. Again, it is so important for us to invest greatly in people along with tools. As Avinash Kaushik says, you should invest 10% in tools and 90% in people.

Technology options/features that can trip you up.

- Metrics calculation process – Know how conversions are calculated (for example, visits vs. absolute visitor vs. page views, etc.). Many tools allow you to change how metrics get calculated, so make sure you are looking or pulling data using the same measure or comparison items throughout the test. Also, realize that individual tools may calculate conversions slightly differently.

- Default validity confidence levels – Understand your testing tool’s default measure of confidence and make sure that it matches your own internal measures.

- Default summaries – One of the most dangerous items in testing is the summary or dashboard view. Most of the juicy test details are hidden so problems that might be occurring in the test are tough to spot. Jon’s experiment is a great example of this. Looking at more specific data (like day-to-day metrics) will give you a better health check of what is happening with the test.

- Uniform sample distribution assumption – Tools assume that the data we are going to receive within tests will be uniformly distributed. However, if you have run your own test you know that this is not always true. As mentioned earlier, testing software has started adding some intelligence tools to try to spot “interesting” data points, but in our experience not many people use these tools. Non-uniform distribution can drastically affect validity and needs to be monitored…which means you need to pay attention to data closely (not in aggregate).
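The daily health check described above can be sketched in a few lines. The rows, dates, and arm names below are entirely hypothetical (they are not Jon's data), and the helper names are made up for this example; the point is only to show how an aggregate can hide a day that behaves differently, and how the picture changes once that day is excluded.

```python
from collections import defaultdict
from datetime import date

# Hypothetical daily export: (day, arm, visits, conversions).
# The middle day stands in for a promotional email hitting the same audience.
rows = [
    (date(2024, 1, 1), "control", 1000, 50),
    (date(2024, 1, 1), "treatment", 1000, 65),
    (date(2024, 1, 2), "control", 3000, 300),
    (date(2024, 1, 2), "treatment", 3000, 300),
    (date(2024, 1, 3), "control", 1000, 50),
    (date(2024, 1, 3), "treatment", 1000, 65),
]


def daily_rates(rows):
    """Conversion rate for every (day, arm) pair, for eyeballing anomalies."""
    return {(day, arm): conv / visits for day, arm, visits, conv in rows if visits}


def conversion_by_arm(rows, excluded_days=frozenset()):
    """Aggregate conversion rate per arm, skipping any excluded days."""
    totals = defaultdict(lambda: [0, 0])  # arm -> [visits, conversions]
    for day, arm, visits, conv in rows:
        if day in excluded_days:
            continue
        totals[arm][0] += visits
        totals[arm][1] += conv
    return {arm: conv / visits for arm, (visits, conv) in totals.items() if visits}


if __name__ == "__main__":
    for (day, arm), rate in sorted(daily_rates(rows).items()):
        print(day, arm, f"{rate:.1%}")
    print("all days:       ", conversion_by_arm(rows))
    print("email day removed:", conversion_by_arm(rows, {date(2024, 1, 2)}))
```

Deciding which days to exclude is still a human judgment call. The code only makes the anomaly visible; someone has to know that an email went out and decide whether that traffic belongs in the test.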

For a five-point testing technology checklist, and to learn more about other technology blind spots and how to address them, view the replay of our latest web clinic.

Related Resources

Online Marketing Optimization Technology: We have ways of making technology talk, Mr. Bond

Optimizing Site Design: How to increase conversion by reducing the technology barrier
