Does ethnicity in marketing images affect a campaign’s performance?

Besides being an important marketing question, it’s also an interesting social question.

The MECLABS research team asked this question because they needed to find the best performing imagery for the first step in the Home Delivery checkout process for a MECLABS Research Partner selling newspaper subscriptions.

The test they designed was simple enough:

Background: Home Delivery ZIP code entry page for a newspaper subscription.

Goal: To increase subscription rate.

Research Question: Which design will generate the highest rate of subscriptions per page visitor?

Test Design: A/B variable cluster split test

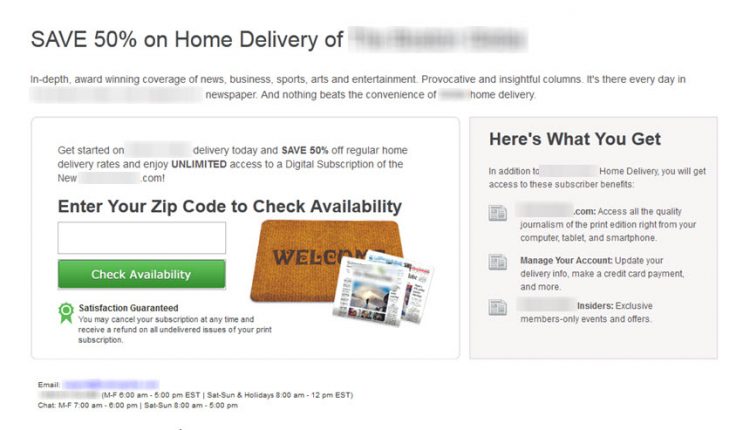

Control: Standard image of newspaper on welcome mat

Treatment 1: Stock image of African American man reading newspaper

Treatment 2: Stock image of older Caucasian couple reading newspaper

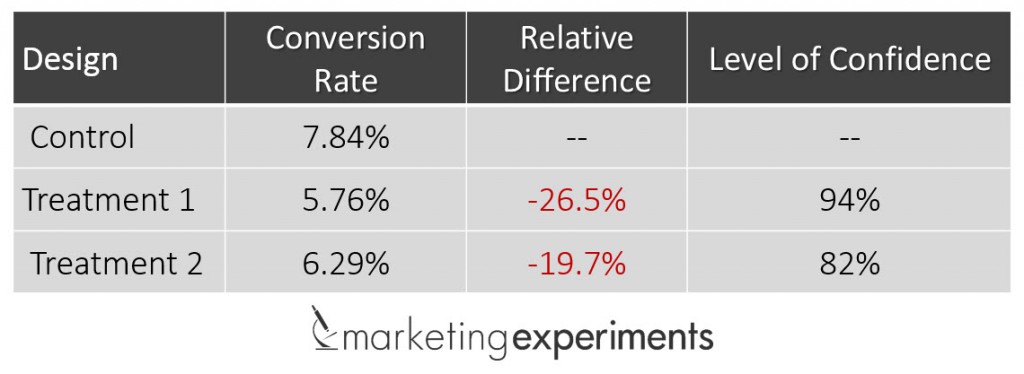

Results

Both treatments with images of people underperformed when compared with the control. However, there was no statistically significant difference in performance between the two treatments, where the ethnicity of the people in the images was the only variable.

What you need to understand

Customers in this case did not respond differently to images of differing ethnicities.

Not only did we find that there was no difference in customer preference between the African American man and the older Caucasian couple, but we also found that the control page with the simple image outperformed both of the pages with images of people.

Unfortunately, we cannot definitively say whether the image was the deciding factor when we compare the two treatments to the control. There were other variables that changed (the headline and call-to-action layout, for instance) that made it difficult to determine what made the difference.

Certainly, the most dramatic change for all the treatments was the image. It would not be a stretch to assume that the images made most of the difference, but we won’t know for sure without further testing.

But did the survey show who converted on what image? If African-American men converted on Treatment 1 then that has a stronger correlation.

Hi Niklas,

If I understand what you’re asking, then no. The metrics we had running for this test were not granular enough to show the ethnicity of each individual visiting the page. So we were not able to measure the correlation between the ethnicity of the visitor and the ethnicity of the person in the image. We were only able to measure the effect of the images on the aggregate customer.

Does that answer your question?

While I understand the need for this testing, what I don’t get is why you changed multiple variables in the same test. Would it not have been more accurate to A/B test each element separately in order to fully understand the effect that the different images, headlines, and copy had on conversion? I also understand that the data was too coarse to measure ethnicity, but I noticed that you only tested three images. Would it not have been better to test, say, the three you did test as well as a young female or a young couple? I think more testing of this is in order.

@shannon smith In theory, yes, it would have been better to test more images and limit the variation. In practice, however, we are always constrained by business objectives and limited sample sizes.

As for business objectives, the line of inquiry you are suggesting for the test (while interesting) would have cost a significant amount of resources with a potentially limited payoff in what we call “customer wisdom.” By using the data we generated from the test in conjunction with our library of over 1,000 similar experiments across different demographics and industries, we can make a fairly educated guess that the images likely had a large impact on the performance of the page.

Because both the treatments with images decreased conversion, the team made a business decision in light of the information they had (limited as it was) not to pursue further testing in this vein, but rather pursue other more important testing questions.

As for sample sizes, every test we run at MECLABS is designed to run the maximum number of treatments the projected sample size will allow to validate in a reasonable time. More treatments would have meant a larger sample of visitors was required to validate the test. In a business environment where you are losing money every day on an underperforming page, we do not have the luxury of running a test for months or years, simply to gain a “potentially” useful insight about the customer.
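To make the sample-size tradeoff concrete, here is a rough sketch of how the required traffic grows as treatments are added. It is not the MECLABS validation method, which the post does not describe. It assumes the standard normal-approximation formula for comparing two conversion rates, with the significance level split across treatments (a Bonferroni correction). The function names, the 5% significance level, and the 80% power are all illustrative assumptions.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_arm(base_rate, relative_lift, alpha=0.05, power=0.80, comparisons=1):
    """Approximate visitors needed in each arm to detect a relative lift.

    Assumption: normal approximation for two proportions, two-sided test,
    with alpha divided across `comparisons` (Bonferroni correction).
    """
    p1 = base_rate
    p2 = base_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (alpha / comparisons) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


def total_visitors(n_treatments, base_rate, relative_lift):
    """Total traffic for one control plus n_treatments, each compared to control."""
    per_arm = visitors_per_arm(base_rate, relative_lift, comparisons=n_treatments)
    return per_arm * (n_treatments + 1)


# Hypothetical inputs: 3% baseline subscription rate, 20% relative lift to detect.
for k in (1, 2, 4):
    print(k, "treatment(s):", total_visitors(k, 0.03, 0.20), "visitors")
```

Under these assumptions, total traffic rises faster than the number of treatments, because each extra treatment adds an arm and also tightens the significance threshold for every comparison.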

We are constantly looking for the minimum effective dose required to gain the maximum customer insight. In this case, the test you see above is what the team landed on when considering all of the constraints of testing in a real business environment.

Now, with all of that said, we may have been wrong about the tradeoffs we needed to make to accomplish our objective in the test. There is always a potentially better line of inquiry, or a better idea to test. But putting myself in the shoes of the business leader in this case, I would rather have a good test now than a perfect test in a year.

I hope this helps. Please feel free to reach out if there’s anything else I can help you with.

@shannon smith

Another thing to note here (in case it’s not clear in the post) is that when we compared the two treatments with images of people, there was no statistically significant difference in performance. So, in a sense, there is a test inside of the test that changed a minimum number of variables and we were able to draw more accurate conclusions around whether different images in the same style had an impact on conversion. We found that they did not.
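For readers who want to run this kind of treatment-versus-treatment comparison on their own data, one common approach is a two-proportion z-test. The sketch below assumes that test. The post does not say which statistical test was actually used, and the visitor and conversion counts are made-up placeholders, not figures from this experiment.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z, p_value). Uses the pooled conversion rate for the standard error.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts for two image treatments (not data from the test above).
z, p = two_proportion_z_test(conv_a=118, n_a=4000, conv_b=124, n_b=4000)
print(f"z = {z:.2f}, p = {p:.3f}")
print("significant at 5%" if p < 0.05 else "no significant difference at 5%")
```

A p-value above the chosen threshold only means the test could not detect a difference at that sample size; it does not prove the two images perform identically.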

Does this post have a part 2 with further test already conducted?

Unfortunately there was no follow up test. Testing priorities were shifted to other parts of the site.