I want everything. And I want it now.

I’m sure you do, too.

But let me tell you about my marketing department. Resources aren’t infinite. I can’t do everything right away. I need to focus myself and my team on the right things.

Unless you found a genie in a bottle and wished for an infinite marketing budget (right after you wished for unlimited wishes, natch), I’m guessing you’re in the same boat.

When it comes to your conversion rate optimization program, it means running the most impactful tests. As Stephen Walsh said when he wrote about 19 possible A/B tests for your website on Neil Patel’s blog, “testing every random aspect of your website can often be counter-productive.”

Of course, you probably already know that. What may surprise you is this …

It’s not enough to run the right tests: you will get a higher ROI if you run them in the right order

To help you discover the optimal testing sequence for your marketing department, we’ve created the free MECLABS Institute Test Planning Scenario Tool (MECLABS is the parent research organization of MarketingExperiments).

Let’s look at a few example scenarios.

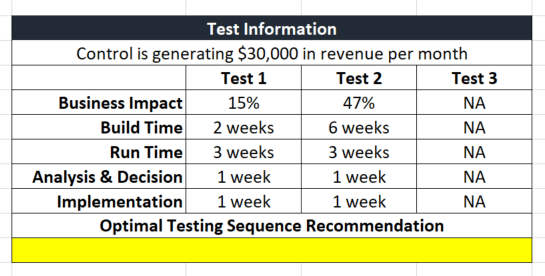

Scenario #1: Level of effort and level of impact

Tests will have different levels of effort to run. For example, it’s easier to make a simple copy change to a headline than to change a shopping cart.

This level of effort (LOE) sometimes correlates to the level of impact the test will have to your bottom line. For example, a radical redesign might be a higher LOE to launch, but it will also likely produce a higher lift than a simple, small change.

So how does the order in which you run a high-effort, high-return test and a low-effort, low-return test affect results? Again, we’re not saying choose one test over another. We’re simply talking about timing. To the test planning scenario tool …

Test 1 (Low LOE, low level of impact)

- Business impact — 15% more revenue than the control

- Build Time — 2 weeks

Test 2 (High LOE, high level of impact)

- Business impact — 47% more revenue than the control

- Build Time — 6 weeks

Let’s look at the revenue impact over a six-month period. According to the test planning tool, if the control is generating $30,000 in revenue per month, running a test where the treatment has a low LOE and a low level of impact (Test 1) first will generate $22,800 more revenue than running a test where the treatment has a high LOE and a high level of impact (Test 2) first.
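To see why order alone can change the total, here is a minimal sketch of the Scenario #1 timeline in Python. It is not the Test Planning Scenario Tool’s model, and its dollar figures will not match the tool’s. The `PlannedTest` class, the `six_month_revenue` function, the four-week run time, the 50/50 traffic split, the compounding of lifts, and the rounding of $30,000 per month to $7,500 per week over 24 weeks are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlannedTest:
    name: str
    lift: float       # projected revenue lift over the control, e.g. 0.15 for 15%
    build_weeks: int  # time needed to build the treatment


def six_month_revenue(order, weekly_control=7_500.0, horizon_weeks=24, run_weeks=4):
    """Total revenue over the horizon when tests launch in the given order.

    Assumptions (illustrative only, not taken from the tool):
      - all treatments are built in parallel, starting at week 0
      - only one test runs at a time, for `run_weeks` weeks
      - during a run, half the traffic sees the treatment (half the lift)
      - every treatment wins, is rolled out to all traffic, and lifts compound
    """
    # Work out when each test starts running and when it is fully rolled out.
    schedule = []
    free_at = 0  # first week in which the page is free for a new test
    for test in order:
        start = max(free_at, test.build_weeks)
        end = start + run_weeks
        schedule.append((test, start, end))
        free_at = end

    total = 0.0
    for week in range(horizon_weeks):
        multiplier = 1.0
        for test, start, end in schedule:
            if week >= end:
                multiplier *= 1 + test.lift      # winner rolled out to everyone
            elif week >= start:
                multiplier *= 1 + test.lift / 2  # test running on a 50/50 split
        total += weekly_control * multiplier
    return total


quick = PlannedTest("Test 1", lift=0.15, build_weeks=2)
big = PlannedTest("Test 2", lift=0.47, build_weeks=6)

quick_first = six_month_revenue([quick, big])
big_first = six_month_revenue([big, quick])
print(f"Test 1 first: ${quick_first:,.0f}")
print(f"Test 2 first: ${big_first:,.0f}")
print(f"Difference:   ${quick_first - big_first:,.0f}")
```

Under these assumptions the low-LOE test comes out ahead when it goes first, because it starts earning during the weeks when the high-LOE treatment is still being built. Changing the lifts, build times or `run_weeks` lets you try other scenarios.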

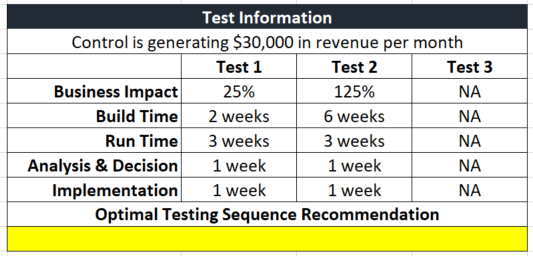

Scenario #2: An even larger discrepancy in the level of impact

It can be hard to predict the exact level of business impact. So what if the difference in business impact between the higher LOE test and the lower LOE test is even greater than in Scenario #1, and both treatments perform even better than they did in Scenario #1? How would test sequence affect results in that case?

Let’s run the numbers in the Test Planning Scenario Tool.

Test 1 (Low LOE, low level of impact)

- Business impact — 25% more revenue than the control

- Build Time — 2 weeks

Test 2 (High LOE, high level of impact)

- Business impact — 125% more revenue than the control

- Build Time — 6 weeks

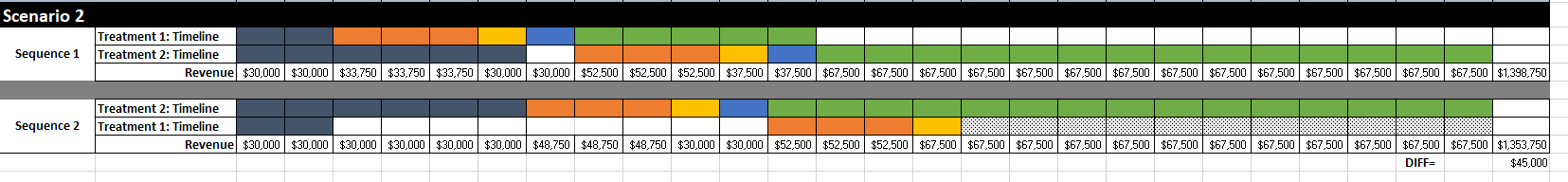

According to the test planning tool, if the control is generating $30,000 in revenue per month, running Test 1 (low LOE, low level of impact) first will generate $45,000 more revenue than running Test 2 (high LOE, high level of impact) first.

Again, these are the same tests over the same six-month period, just in a different order. And you gain $45,000 more in revenue.

“It is particularly interesting to see the benefits of running the lower LOE and lower impact test first so that its benefits could be reaped throughout the duration of the longer development schedule on the higher LOE test. The financial impact difference — landing in the tens of thousands of dollars — may be particularly shocking to some readers,” said Rebecca Strally, Director, Optimization and Design, MECLABS Institute.

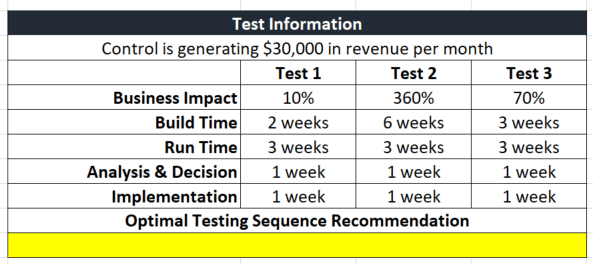

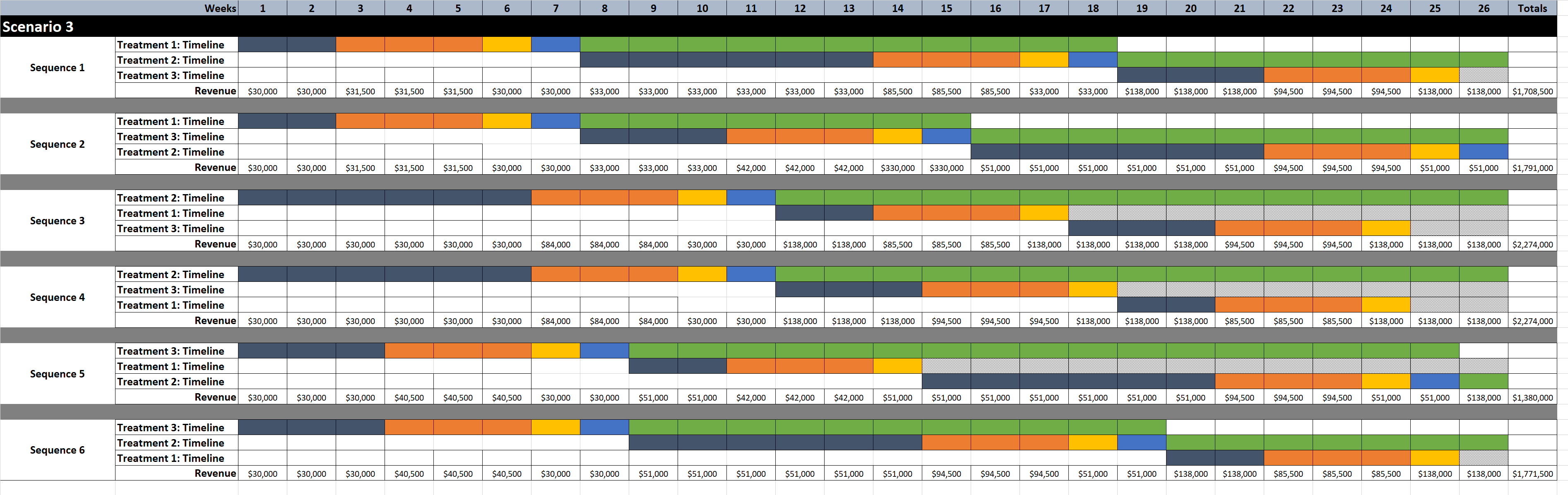

Scenario #3: Fewer development resources

In the above two examples, the tests could be developed simultaneously. What if the tests must be developed sequentially, and development of each test can’t begin until the previous test has been implemented? Perhaps this is because of your organization’s development methodology (Agile vs. Waterfall, etc.), or there is simply a limit on your development resources. (Your developers likely have many projects other than your tests.)

Let’s look at that scenario, this time with three treatments.

Test 1 (Low LOE, low level of impact)

- Business impact — 10% more revenue than the control

- Build Time — 2 weeks

Test 2 (High LOE, high level of impact)

- Business impact — 360% more revenue than the control

- Build Time — 6 weeks

Test 3 (Medium LOE, medium level of impact)

- Business impact — 70% more revenue than the control

- Build Time — 3 weeks

In this scenario, two sequences tied as the highest performing: Test 2, then Test 1, then Test 3; and Test 2, then Test 3, then Test 1. The lowest-performing sequence was Test 3, then Test 1, then Test 2. Using one of the highest-performing sequences generated $894,000 more revenue than using the lowest-performing sequence.

“If development for tests could not take place simultaneously, there would be a bigger discrepancy in overall revenue from different test sequences,” Strally said.

“Running a higher LOE test first suddenly has a much larger financial payoff. This is notable because once the largest impact has been achieved, it doesn’t matter in what order the smaller LOE and impact tests are run, the final dollar amounts are the same. Development limitations (although I’ve rarely seen them this extreme in the real world) created a situation where whichever test went first had a much longer opportunity to impact the final financial numbers. The added front time certainly helped to push running the highest LOE and impact test first to the front of the financial pack,” she added.
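Strally’s point about the first test having a longer opportunity to affect the numbers can be seen from the calendar alone. The sketch below is a rough illustration, not the tool’s calculation: the `rollout_weeks` function and the four-week run time are hypothetical, and it assumes each test is built, run and implemented before the next build starts. It reports timing only and does not attempt to reproduce the dollar figures.

```python
from itertools import permutations

# Build times in weeks, taken from Scenario #3.
BUILD_WEEKS = {"Test 1": 2, "Test 2": 6, "Test 3": 3}


def rollout_weeks(order, run_weeks=4):
    """Week at which each test is fully rolled out under sequential development.

    Assumption (illustrative only): a test is built, then run for `run_weeks`
    weeks, then implemented, and only then does the next build begin.
    """
    week = 0
    rollout = {}
    for name in order:
        week += BUILD_WEEKS[name] + run_weeks
        rollout[name] = week
    return rollout


for order in permutations(BUILD_WEEKS):
    weeks = rollout_weeks(order)
    print(" -> ".join(order), "| Test 2 rolled out at week", weeks["Test 2"])
```

With these assumed run times, the highest-impact test is live many weeks earlier when it goes first than when it goes last, which is the extra “front time” the quote describes.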

The Next Scenario Is Up To You: Now forecast your own most profitable test sequences

You likely don’t have the precise information we assumed in the model scenarios above, and the real world can be trickier. After all, as Nobel Prize-winning physicist Niels Bohr said, “Prediction is very difficult, especially if it’s about the future.”

“We rarely have this level of information about the possible financial impact of a test prior to development and launch when working to optimize conversion for MECLABS Research Partners. At best, the team often only has a general guess as to the level of impact expected, and it’s rarely translated into a dollar amount,” Strally said.

That’s why we’re providing the Test Planning Scenario Tool as a free, instant download. It’s easy to run a few different scenarios in the tool based on different levels of projected results and see how the test order can affect overall revenue. You can then use the visual charts and numbers created by the tool to make the case to your team, clients and business leaders about the order in which you should run your company’s tests.

Don’t put your tests on autopilot

Of course, things don’t always go according to plan. This tool is just a start. To have a successful conversion optimization practice, you have to actively monitor your tests and advocate for the results because there are a number of additional items that could impact an optimal testing sequence.

“There’s also the reality of testing which is not represented in these very clean charts. For example, things like validity threats popping up midtest and causing a longer run time, treatments not being possible to implement, and Research Partners requesting changes to winning treatments after the results are in, all take place regularly and would greatly shift the timing and financial implications of any testing sequence,” Strally said.

“In reality though, the number one risk to a preplanned DOE (design of experiments) in my experience is an unplanned result. I don’t mean the control winning when we thought the treatment would outperform. I mean a test coming back a winner in the main KPI (key performance indicator) with an unexpected customer insight result, or an insignificant result coming back with odd customer behavior data. This type of result often creates a longer analysis period and the need to go back to the drawing board to develop a test that will answer a question we didn’t even know we needed to ask. We are often highly invested in getting these answers because of their long-term positive impact potential and will pause all other work — lowering financial impact — to get these questions answered to our satisfaction,” she said.


Great article! I read your blog fairly and most of the time you are coming out with some great stuff and Thanks for sharing such great information. Keep up the immense work.

I really appreciate you for sharing this wonderful testing outcome. I want to ask, how will this model work for a startup eCommerce business? I am eager to hear from you. Thanks for sharing!

Victor Rooney,

The process would be the same. There is just more guesswork involved in what the control could generate in revenue (if it isn’t generating revenue yet), what the business impact will be, etc. It’s a good exercise, though, to have to think through these elements of your business. And the more and longer you do it, the tighter those estimates will get. The effort estimates will also help you get a handle on your resources and ability to execute. The run time estimates may necessitate buying traffic (e.g., through PPC ads) if you don’t have your own organic traffic.

For anyone thinking of launching a startup ecommerce business, here is an article that may help: https://marketingexperiments.com/value-proposition/entrepreneur

A/B testing has really proven to be the tip of the iceberg; it has really helped me understand what my customers want.

Your article is what I have been searching for for a long time.

I’ve never applied A/B testing in my campaigns. I will look into using it to see how I can drive sales.

Interesting! Good article on A/B Testing.

This is actually what i needed, Thanks for sharing.

You actually make it seem so easy with your presentation, but I find this topic to be really something that I think I would never understand. It seems too complicated and extremely broad for me. I’m looking forward to your next post; I will try to get the hang of it!

Fantastic!

Thanks for sharing this amazing post. I have read your article and found many important information related to A/B testing.

Great Stuff on A/B Testing.

I really appreciate you for sharing this wonderful testing outcome. I want to ask, how will this model work for a startup eCommerce business? I am eager to hear from you. Thanks for sharing!

Same as with any other business – this model can help you prioritize your marketing tests to get the most impact. If you’re interested in running marketing tests at an ecommerce business, this article has a good example: https://marketingexperiments.com/a-b-testing/ecommerce-value-proposition
