Most marketers are afraid to use the word “FREE” (especially in all caps) in their emails because they’ve heard it tends to trigger spam filters. When that happens, it’s generally a bad thing, as fewer of your emails get read. No emails getting read means no offers being viewed. That’s BAD. Right?

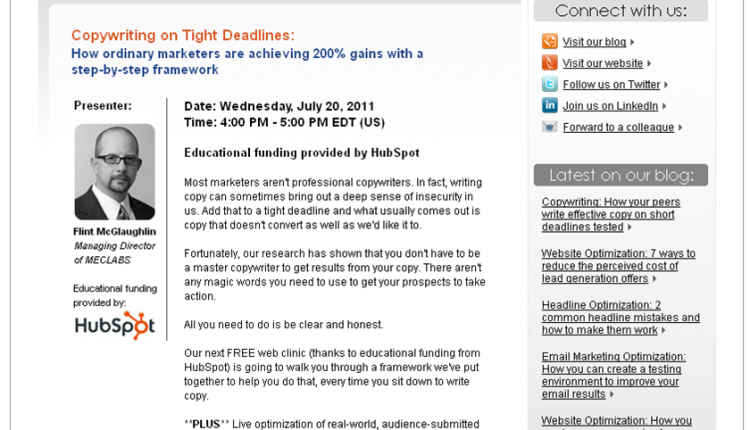

Well, as the Junior Editorial Analyst here at MECLABS, MarketingExperiments’ parent company, I’m charged with sending out emails to our MarketingExperiments list of about 100,000 marketers around the globe. That means I also get to test just about anything I want in an email to probably most of the people reading this blog post.

Because of the hot debate around this issue, I knew I wanted to test the word “FREE” in an email to see what it did.

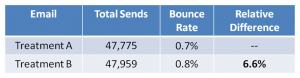

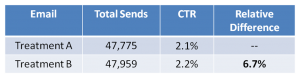

It turns out, the word “FREE” in the body of my Web clinic email [could have (see comments below)] hurt deliverability by 6.6%. But despite a higher bounce rate, I still got a higher clickthrough rate.

Here are the deliverability statistics on the two emails:

Treatment A:

Treatment B:

The Bounce Rate Results:

What you need to understand: PART 1

In this case, it would appear that using the word “FREE” in the body of an email can hurt your deliverability. But also in this case, deliverability isn’t our main KPI—it’s clickthrough rate.

With that in mind, I examined the results of the clickthrough rate compared to the total sends.

The Clickthrough rate results

What you need to understand: PART 2

When I measured the clickthrough rate against the total attempted sends, I still got a 6.7% increase in total clicks. In other words, taking into account the number of bounces the word “FREE” caused, email treatment B still won by a statistically significant margin.

How to test “spammy” words in your own emails

We already know that clarity trumps persuasion. What we didn’t know was that clarity trumps even email deliverability. According to this test, it would seem that by simply stating that the Web clinic was free and being a little more specific in our wording about what was on the other side of the click, we got better results than when we tried to avoid the word “FREE.”

However, just because it worked for the MarketingExperiments audience doesn’t necessarily mean it will work for yours. Here are a few tips for testing “spammy” words in your own email campaigns.

- Always be clear. Don’t just test words like “free” because you heard on this blog that you can get a higher click-through-rate with it. Be clear first. If there is a clearer way to say something without saying “free,” say it.

- Make sure your results are valid. With smaller margins of difference, you could be making a big mistake assuming your numbers are statistically significant when they aren’t. Rule out other validity threats as well.

- Measure the clickthrough rate and the bounce rate relative to the total number of attempted sends. This one seems like a no-brainer but I almost made the same mistake myself when I was crunching the numbers. You’d be surprised how silly mistakes like this can totally ruin your view of a test.
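The last tip can be sketched in a few lines of Python. This is only an illustration: the function name `campaign_rates` and the figures in the usage example are hypothetical and are not the numbers from this test. It shows how the same click count yields a different clickthrough rate depending on whether you divide by attempted sends or by delivered (non-bounced) emails.

```python
def campaign_rates(attempted, bounced, clicks):
    """Return bounce rate and clickthrough rate for one email treatment.

    All three inputs are raw counts. The names are illustrative only.
    """
    delivered = attempted - bounced
    return {
        # Both of these use attempted sends as the denominator,
        # so the cost of bounces is already baked into the CTR.
        "bounce_rate": bounced / attempted,
        "ctr_attempted": clicks / attempted,
        # Shown for comparison: dividing by delivered emails hides
        # the clicks lost to bounces and flatters the treatment.
        "ctr_delivered": clicks / delivered,
    }


# Hypothetical figures, chosen only to make the arithmetic easy to follow.
example = campaign_rates(attempted=1000, bounced=100, clicks=90)
for name, value in example.items():
    print(f"{name}: {value:.1%}")
```

Comparing two treatments on `ctr_attempted` means a treatment that bounces more has to earn its win despite the lost deliveries.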

What’s your experience with “spammy” words in emails? Do you find they help more than they hurt? Vice versa? Tell us about it in the comments…

Related Resources:

Email Marketing Optimization: How you can create a testing environment to improve your email results

Graphic vs. Text Emails: How differing approaches encouraged future testing

This research would have had much greater impact had the word FREE also been tested in the subject line. Seems to me in this age of smartphones a 6% jump in clicks is irrelevant if open rates are depressed by spammy words in the subject.

I don’t think that you can rightfully claim a 0.1% difference in bounce rates between the two messages was caused solely by the use of the word “free” in the body of a message – not even with it in all CAPS.

It’s much too simplistic a claim that only perpetuates the myth that this, that, or another word is solely responsible for blocking, bouncing or true deliverability. Besides, bounced messages aren’t an indicator of Inbox deliverability anyway, so the claim is false on its face….

@John Caldwell

Hi John,

I should have mentioned in the post that it was in fact a statistically significant difference. Because of the number of sends, we were able to get a more granular view of the results.

I’m going to try to get someone on our sciences team on here to explain it better.

As far as whether bounce rates are an indicator of inbox deliverability, I honestly just assumed they were. Can you clarify what you mean?

Hey Paul,

Let’s start with the easy stuff; deliverability is Inbox measurement, not acceptance by the receiving server.

The options of a receiving server are to ignore (blackhole), reject (bounce), or accept (deliver to account) an inbound message. Delivery to the account doesn’t mean delivery to the Inbox (deliverability); there are more steps.

Deliverability as an aggregate doesn’t really mean much if it’s not weighted to the sender’s database to be significant to them; so there’s that variable that could get in the way….

Paul, is it fair to say “hurt deliverability by 6.6%”? The 6.6% is the increase in bounces, not the decrease in emails accepted by the destination. The number of emails that did not bounce dropped from 99.3% to 99.2%. Weren’t delivery numbers hurt by just over 0.1%?

There may be (or not) more delivery problems going on later in the delivery chain, as John says. Mail that does not bounce can still get quietly deleted before delivery or rerouted to spam/junk folders rather than the inbox.
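The gap between a figure like 6.6% and a figure like 0.1% comes down to absolute versus relative change. Here is a minimal Python sketch of that arithmetic. The bounce rates used are hypothetical, picked only to be on a similar scale to the ones discussed here; they are not the actual test data, and the function name is made up for illustration.

```python
def absolute_and_relative_change(rate_a, rate_b):
    """Compare two rates given as fractions (0.0075 means 0.75%).

    Returns (absolute change, relative change versus rate_a).
    """
    absolute = rate_b - rate_a
    relative = absolute / rate_a
    return absolute, relative


# Hypothetical bounce rates for two treatments (not the real figures).
bounce_a, bounce_b = 0.0075, 0.0080

# Measured on bounces, the shift looks large in relative terms.
abs_bounce, rel_bounce = absolute_and_relative_change(bounce_a, bounce_b)

# Measured on accepted mail (1 - bounce rate), the same shift looks tiny.
abs_accept, rel_accept = absolute_and_relative_change(1 - bounce_a, 1 - bounce_b)

print(f"bounces:  {abs_bounce:+.4%} absolute, {rel_bounce:+.2%} relative")
print(f"accepted: {abs_accept:+.4%} absolute, {rel_accept:+.2%} relative")
```

The absolute change is the same size in both cases; only the baseline it is divided by differs, which is why it matters to say which rate a percentage refers to.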

@John Caldwell

@Mark Brownlow

In the words of The Captain, “What we’ve got here is… failure to communicate.”

What’s worse is it’s MY fault.

Deliverability, in the strict sense of the word, does in fact mean more than simply “bounce rate.” There’s a lot more that goes into the “deliverance” of an email than just not bouncing.

For assuming “bounce rate” was synonymous with “deliverability,” I’m sorry. I was wrong. 🙁

My only intention for the post was to encourage marketers who may be worried about triggering spam filters with so-called “spammy words,” to reconsider avoiding them altogether when they could add clarity to the offer.

That said, both of you brought up the point that delivery rates dropped only by 0.1% and so there was some question as to whether that was actually significant.

The answer is simply–yes. It is significant. If you would like to check the math, here are a few resources that support it:

Marketing Optimization: How to determine the proper sample size

Online Testing and Optimization: ROI your test results by considering effect size

Online Marketing Tests: How could you be so sure?

Online Marketing Tests: How do you know you’re really learning anything?

Essentially, we aren’t really worried about the ABSOLUTE difference in bounce rate (the 0.1%); we’re worried about the RELATIVE difference (the 6.6%).

As long as that relative difference is statistically significant (for us this means at least a 95% level of confidence) then we can be pretty sure that if sent over and over again the email with the term “FREE” in it would have consistently bounced more often than the one without the term “FREE.”

If we had continued to send that email hundreds of thousands of times, that 0.1% would have translated to hundreds more bounced emails. In that sense, 0.1% is definitely significant.
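For anyone who wants to run this kind of check on their own numbers, here is a rough Python sketch of a pooled two-proportion z-test, one common way to ask whether two bounce rates differ at a 95% level of confidence. It is an assumption that this is a reasonable stand-in; it is not necessarily the method our sciences team uses. The function name and the counts in the usage example are hypothetical, not the data from this test.

```python
import math


def two_proportion_z_test(bounces_a, sends_a, bounces_b, sends_b):
    """Pooled two-proportion z-test on raw counts.

    Returns (z, two-sided p-value). A p-value below 0.05 corresponds
    to the 95% level of confidence mentioned above.
    """
    rate_a = bounces_a / sends_a
    rate_b = bounces_b / sends_b
    # Pooled rate under the assumption that both treatments bounce equally.
    pooled = (bounces_a + bounces_b) / (sends_a + sends_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sends_a + 1 / sends_b))
    if std_err == 0:
        # No variation at all (e.g. zero bounces in both): no evidence of a difference.
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts, for illustration only.
z, p = two_proportion_z_test(bounces_a=50, sends_a=1000, bounces_b=70, sends_b=1000)
print(f"z = {z:.3f}, p = {p:.4f}, significant at 95%: {p < 0.05}")
```

Whether a given relative difference clears the 95% bar depends heavily on how many emails were sent, so the raw counts matter as much as the percentages.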

Now, on to the issue of bounce rate…

Is bounce rate the only factor in email deliverability? No. Does it hurt deliverability? As far as I know, bounces are still bad. It means that an email message isn’t getting delivered.

But I could still be wrong about that.

And that’s where my post failed…I used the wrong metric. In an ideal world what I should have used (as our sage Data-Analyst Phillip Porter pointed out) was number of bounces caused by spam filters.

Of course, because of limitations in technology, we simply can’t measure that. However, what I could have used to get a little bit better picture of deliverability was open rate.

Everything in both emails was the same except for the call to action. That means that the only thing that would have significantly affected open rate would have been actual deliverability.

So with that said, I’m going back to the drawing board on this one to see if open rates were affected by the call to action. I’ll add a comment here when I find out.

Thank you both so much for your comments. This is a great example of the power of the social web driving the synthesis of ideas (even if they are just between us). Hegel would be proud 🙂

@Mark Brownlow You know, based on the data presented an assumption could be made that messages not containing the word FREE were filtered to junk/spam folders and that’s why the ones containing FREE got a higher click through…. I’m just sayin’…. 🙂

@Paul Cheney I don’t disagree with the position that people shouldn’t get hung up on “spammy” words.

I should disclaim that for several years I made many millions of dollars in the email channel for a company with “free” in their name. Another word in the company’s name was also deemed, “spammy” by pundits with opinions not formed in experience; and my deliverability – Inbox penetration – was in the mid 90s.

That said, bounces come in two flavors; hard and soft, and with a variety of toppings. Unless you know when someone’s Inbox is going to be full, or any of the other soft bounce toppings, you’re trying to pin statistical accuracy on a moving target.

The only consistent “bounce” control group you can have are hard bounces, and since you should never send to a hard bounce a second time, it doesn’t really matter…. You could send hundreds of billions of times and the word “FREE” will still have no impact on bounced messages.

A bounce rate isn’t a factor in deliverability, because in order to be delivered to even a junk folder the message has to be accepted. A bounced message was not accepted but rejected. Received does not equal accepted, nor delivered to any account folder.

How can you say that the only thing that can impact an open rate is deliverability when years of studies have shown an increase in the value of a recognizable Sender Name over the Subject Line? 70% of recipients look first for a recognized sender, then to the subject line (30%) to decide if they’ll open a message.

Now, if the message never makes it to the Inbox then the recipient never sees the sender or subject, so deliverability – of accepted – messages matters.

Your open rates will never be affected by your CTA unless you’re mailing to authentic psychics, and I’m not sure how you’d know; although I would expect them to. But I digress….

Here’s the order:

1. A recognized Sender Name and compelling Subject Line cause Opens

2. A compelling value proposition and CTA cause Clicks

3. Conversions happen on the landing page.

So, I would guess that more of your readers found FREE to be more compelling than the alternative in your test, but what does that mean to the marketer, and how will they translate your findings to their boss in order to get executive buy-in?