One of the biggest questions our audience struggles with is: what do their tests actually mean? And sometimes, frankly, they see a result, any result, and are overly confident about what they’ve learned from it.

So recently, here on the MarketingExperiments blog, we discussed statistical significance and validity as well as confidence and probability.

When he read those posts, MECLABS Data Analyst Phillip Porter made a good point: “Significance just tells us if there’s a difference, not if it’s important.”

Since Phillip dives into data like Greg Louganis off a springboard, I wanted to find out more…and learned a lot from him in the process (Phillip, not Greg Louganis). Let’s begin by backing up a bit…

How to ensure you gain business intelligence from your tests

Determining if you can learn something from your tests isn’t as simple as dropping the results into an online testing platform’s statistical blender and asking, “Does it validate?” You must ensure you understand these four elements for your tests:

- Reliability – you get the same answer every time

- Validity – you get a right answer (i.e., the answer you get accurately reflects reality)

- Statistical Significance – that answer is different from the other possible answers

- Effect Size – that answer is different by enough to be worth investing in its implementation

So, once you’ve got the first three down cold, you know you’ve actually learned something. But is it worth acting upon…

Effect size

Reporting effect size is a relatively new practice in statistics (since the 1980s or so), particularly in social science research, but it is starting to become the standard. The Publication Manual of the American Psychological Association, 5th edition, refers to research that doesn’t report effect sizes as “inferior.” Phillip’s background is in education research, so he is particularly enamored with the topic. But how does it affect your business? He explains it this way…

“In terms of size, what does a 0.2% difference mean? For Amazon.com, that lift might mean an extra 2,000 sales and be worth a $100,000 investment,” Phillip said. “For a mom-and-pop Yahoo! store, that increase might just equate to an extra two sales and not be worth a $100 investment.”

“Of course,” Phillip went on to say, “those two sales might be worth a few million dollars each to a B2B site.”

In other words, degree matters. As I said in those previous posts, you simply can’t be looking for a yes/no answer. You must understand not only what the result is, but how it will impact your business to understand if it is worth making an investment based on your test.
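Phillip’s comparison can be put into a few lines of arithmetic. The sketch below is only an illustration: it reads the 0.2% difference as 0.2 percentage points of conversion rate, and the visitor counts and per-sale values are hypothetical numbers chosen so the totals match his 2,000 and two extra sales. The function names are ours, not part of any testing tool.

```python
def extra_sales(visitors: int, lift: float) -> int:
    """Additional sales produced by an absolute lift in conversion rate."""
    return round(visitors * lift)


def is_worth_it(extra: int, value_per_sale: float, investment: float) -> bool:
    """True when the value of the extra sales exceeds the cost of the change."""
    return extra * value_per_sale > investment


LIFT = 0.002  # the 0.2% difference, assumed to mean 0.2 percentage points

# Hypothetical traffic levels (not from the article).
large_retailer = extra_sales(1_000_000, LIFT)
small_store = extra_sales(1_000, LIFT)

print("Large retailer extra sales:", large_retailer)
print("Small store extra sales:", small_store)

# Hypothetical per-sale values: $20 for a small consumer store,
# $2,000,000 for a B2B site, both weighed against a $100 investment.
print("Small store worth $100?", is_worth_it(small_store, 20, 100))
print("B2B site worth $100?", is_worth_it(small_store, 2_000_000, 100))
```

The same lift produces very different answers depending on traffic and what a sale is worth, which is the point of looking at effect size and not only significance.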

Traffic matters

But traffic matters, too….

“Effect size becomes more important when we are dealing with tests that have unusually low or high power (in our usage these would be tests with low traffic or high traffic),” Phillip said. “Low traffic tests won’t validate, even with a large enough effect size to be worthwhile. High traffic tests can validate even when the effect size is so small as to be useless.”

“Every test will reach significance if we let it run long enough. This is one of the reasons for the sample size calculation: it gives us the minimum sample size at which we can expect to see significance. If we get to that point and keep running the test, we increase our chances of finding significance but decrease the value of that finding.”
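To see how traffic changes the verdict, here is a minimal sketch using a standard two-proportion z-test and a common normal-approximation formula for sample size. These are textbook methods we are assuming for illustration, not necessarily the calculation MECLABS or any particular testing platform uses, and the conversion rates and traffic figures are made up.

```python
from math import ceil, sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


def min_sample_size_per_arm(baseline: float, min_diff: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per treatment to detect min_diff."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p1, p2 = baseline, baseline + min_diff
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / min_diff ** 2)


# Same observed rates (5.0% vs. 5.2%) at two hypothetical traffic levels.
low_traffic_p = two_proportion_p_value(100, 2_000, 104, 2_000)
high_traffic_p = two_proportion_p_value(100_000, 2_000_000, 104_000, 2_000_000)

print(f"Low traffic p-value:  {low_traffic_p:.3f}")
print(f"High traffic p-value: {high_traffic_p:.3g}")
print("Visitors needed per arm:", min_sample_size_per_arm(0.05, 0.002))
```

With 2,000 visitors per treatment the 0.2-point difference is nowhere near significant; with two million per treatment the identical difference validates easily. Whether that difference is worth acting on is a separate question the p-value cannot answer.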

So, while accurate testing relies on mathematics, successful testing needs some help from the business realm as well. Statistics will eventually give you an answer, but is it an answer that will help your bottom line?

How to use effect size to affect your bottom line

Even if you can’t get all the complex math down right, a basic understanding of effect size can help guide you as you try to profit from your tests.

Essentially, you must make sure the additional revenue justifies any additional costs. Sounds pretty obvious, right? But it’s all too easy for a company to chase the revenue gain and lose sight of the overall ROI when they see a successful test.

Our researchers think about effect size before the test is even run. For example, in the MECLABS Test Protocol, they must answer the question, “What is the minimum difference you’re willing to accept?” By having to answer this question, they are forced to factor in the ROI of any potential optimization changes. Again, this is a business decision, not something your data analysts should answer for you.

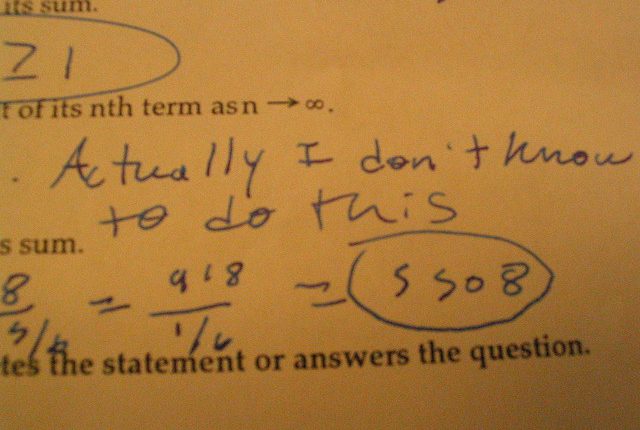

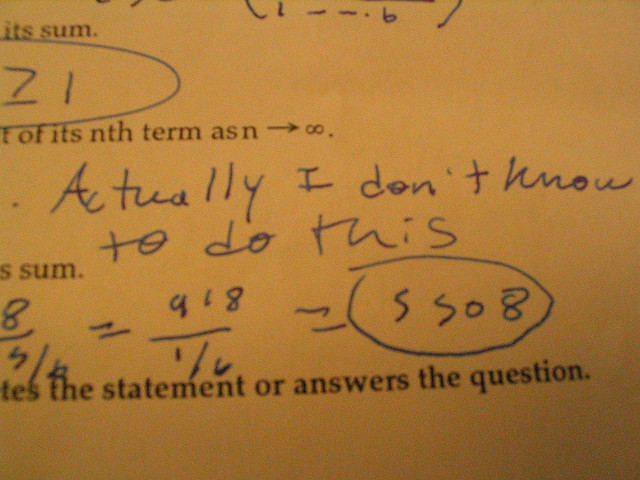
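One simple way to approach the “minimum difference” question is to work backward from cost. The sketch below assumes the only inputs are the cost of implementing the change, the traffic that will see it, and the profit per sale. All three figures are hypothetical, and a real decision would likely weigh other factors such as time horizon and risk.

```python
from math import ceil


def break_even_sales(implementation_cost: float, profit_per_sale: float) -> int:
    """Extra sales needed before the change pays for itself."""
    return ceil(implementation_cost / profit_per_sale)


def break_even_lift(implementation_cost: float, visitors: int,
                    profit_per_sale: float) -> float:
    """Smallest absolute lift in conversion rate that covers the cost."""
    return implementation_cost / (visitors * profit_per_sale)


# Hypothetical inputs: a $100,000 change, 1,000,000 visitors, $50 profit per sale.
cost, visitors, profit = 100_000, 1_000_000, 50

print("Extra sales needed:", break_even_sales(cost, profit))
print(f"Minimum lift: {break_even_lift(cost, visitors, profit):.2%}")
```

Any test result below that minimum lift, however statistically significant, would not pay for itself under these assumptions.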

So, no copying off the math whiz. This is homework that only you can complete.

Related resources

Online Marketing Tests: How do you know you’re really learning anything?

Online Marketing Tests: How could you be so sure?

Why use Effect Sizes instead of Significance Testing in Program Evaluation?

The Fundamentals of Online Testing online training and certification course

Photo attribution: attercop311