At the end of March, HubSpot, the marketing software company, held a webinar on “The Science of Timing.” The event drew almost 25,000 registrants and close to 10,000 attendees. Driving this success was a fast-paced, extensive email campaign sent to different segments of HubSpot’s list, including prospects and new leads.

“The Science of Timing” was hosted by Dan Zarrella, HubSpot’s Social Media Scientist, and built on a series of webinars from the previous two years covering topics such as “The Science of Twitter,” “The Science of Blogging,” “The Science of Facebook,” and more. “The Science of Timing” covered when to do these social activities: when to tweet to get retweets, when to publish new blog posts, and when to update a Facebook status.

Subject line testing

HubSpot regularly conducts testing and optimization, and for this send it tested the subject line.

Eric Vreeland, Inbound Marketing Manager, HubSpot, explains the reason for this test: “Our goal is to generate the most amount of leads possible. Because our email list is so large, a 0.1% increase in open or clickthrough can result in a large number of form conversions and leads. We opted to test subject lines in order to maximize the total conversions we got for all our promotional efforts.”
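To see why small gains matter at this scale, here is a back-of-the-envelope sketch. It assumes a list of about 500,000 addresses (HubSpot’s approximate total list size) and reads Vreeland’s “0.1%” as 0.1 percentage points (for example, 13.9% rising to 14.0%); both are assumptions for illustration, and the function name is hypothetical.

```python
def extra_responses(list_size, rate_increase_points):
    """Additional opens (or clicks) produced by an absolute rate
    increase expressed in percentage points."""
    return list_size * rate_increase_points / 100

# Assumption: ~500,000 addresses and a 0.1 percentage-point increase.
gain = extra_responses(500_000, 0.1)
print(f"About {gain:,.0f} additional opens per send")
```

If “0.1%” were instead meant as a relative increase (13.9% becoming roughly 13.914%), the gain would be much smaller, so it is worth being explicit about which kind of increase you mean when you set test goals.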

To create a mailing list for the test, HubSpot randomly pulled about 50,000 addresses from its total list of around 500,000. This subgroup was randomly split into five groups, and each group was sent an email with exactly the same copy.

All five groups were directed to the same landing page after clicking through.

All five sends went out simultaneously at 10:00 a.m. The total list size was 54,987.
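The article doesn’t describe how HubSpot performed the random split, so the following is only a sketch of one common way to do it: sample without replacement, then deal the sample round-robin into groups. The address list, sample size of 55,000, and seed are all hypothetical stand-ins.

```python
import random

def split_test_groups(full_list, sample_size, n_groups, seed=None):
    """Randomly sample `sample_size` addresses and deal them into `n_groups` groups."""
    rng = random.Random(seed)
    # random.sample draws without replacement and returns the picks in
    # random order, so every slice of it is itself a random sample.
    sample = rng.sample(full_list, sample_size)
    # A strided slice deals the sample round-robin, so group sizes
    # differ by at most one address.
    return [sample[i::n_groups] for i in range(n_groups)]

# Hypothetical stand-in for a ~500,000-address house list.
house_list = [f"user{i}@example.com" for i in range(500_000)]
groups = split_test_groups(house_list, 55_000, 5, seed=42)
print([len(g) for g in groups])  # [11000, 11000, 11000, 11000, 11000]
```

Fixing the seed makes the split reproducible, which helps if you later need to audit which addresses received which subject line.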

Results

Group 1

- Subject line: [New Webinar] What time should you blog, tweet, and email?

- Total delivered: 11,037

- Total opens: 1,534

- Total clicks: 245

- Open rate: 13.90%

- Clickthrough: 2.22%

Group 2

- Subject line: [New Data] The Science of Timing. When you should tweet, blog, email, and more

- Total delivered: 10,996

- Total opens: 1,562

- Total clicks: 247

- Open rate: 14.21%

- Clickthrough: 2.25%

Group 3

- Subject line: [New Webinar] The best times to do your online marketing

- Total delivered: 10,924

- Total opens: 1,506

- Total clicks: 214

- Open rate: 13.79%

- Clickthrough: 1.96%

Group 4

- Subject line: [New Webinar] Learn the science of timing your blog posts, tweets, emails and more

- Total delivered: 11,102

- Total opens: 1,488

- Total clicks: 247

- Open rate: 13.40%

- Clickthrough: 2.22%

Group 5

- Subject line: Learn the best days and times to blog, email, tweet and more

- Total delivered: 10,928

- Total opens: 1,414

- Total clicks: 226

- Open rate: 12.94%

- Clickthrough: 2.07%

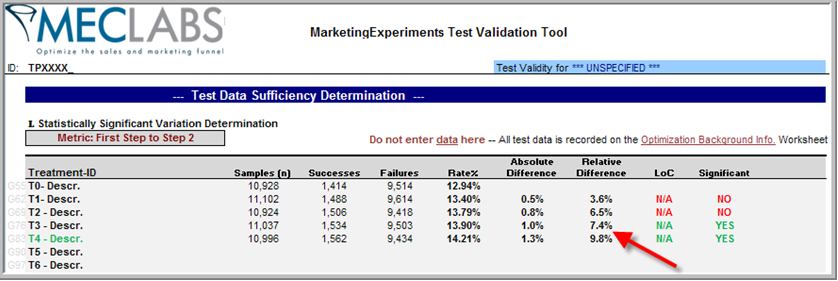
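For readers who want to check the arithmetic, the percentages above can be recomputed from the raw counts. Note that “Clickthrough” in these results is clicks divided by emails delivered, not clicks divided by opens; the sketch assumes that definition because it is the one that reproduces the published figures.

```python
# Counts copied from the results above: (delivered, opens, clicks)
results = {
    1: (11_037, 1_534, 245),
    2: (10_996, 1_562, 247),
    3: (10_924, 1_506, 214),
    4: (11_102, 1_488, 247),
    5: (10_928, 1_414, 226),
}

def rates(delivered, opens, clicks):
    """Open rate and clickthrough, both as a share of delivered emails."""
    return opens / delivered, clicks / delivered

for group, counts in results.items():
    open_rate, ctr = rates(*counts)
    print(f"Group {group}: open rate {open_rate:.2%}, clickthrough {ctr:.2%}")

total_delivered = sum(d for d, _, _ in results.values())
print(f"Total delivered: {total_delivered:,}")  # Total delivered: 54,987
```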

Analysis

We ran the numbers through the MECLABS Test Validation Tool (part of the MECLABS Test Protocol that our research analysts use with Research Partners). Because the tests weren’t performed in our own labs, we couldn’t check for possible validity threats and had to assume the data was normally distributed. Under those assumptions, the two best-performing treatments, Group 2 and Group 1, showed a statistically significant difference in open rate from the worst-performing treatment, Group 5, at a 95% confidence level.
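The MECLABS Test Validation Tool is proprietary and its internals aren’t described here. As a rough cross-check under the same normality assumption, a standard pooled two-proportion z-test can compare each group’s open rate against Group 5. This is a swapped-in textbook technique, not necessarily what the tool does.

```python
import math

def two_proportion_z_test(x1, n1, x2, n2):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# (opens, delivered) for each group, from the results above.
opens = {1: (1_534, 11_037), 2: (1_562, 10_996), 3: (1_506, 10_924),
         4: (1_488, 11_102), 5: (1_414, 10_928)}

for group in (1, 2, 3, 4):
    z, p = two_proportion_z_test(*opens[group], *opens[5])
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"Group {group} vs Group 5: z = {z:.2f}, p = {p:.3f} ({verdict} at 95%)")
```

This check agrees with the finding above: only Groups 2 and 1 clear the 95% threshold against Group 5. Because several pairwise comparisons are being made, a stricter analysis might also adjust for multiple testing.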

Group 2 provided a 9.8% lift and Group 1 a 7.4% lift in open rate over Group 5 (the control).
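These lifts are relative, not differences in percentage points: each treatment’s open rate divided by Group 5’s, minus one. For example, 14.21% versus 12.94% is a gap of only about 1.3 percentage points but a 9.8% relative lift. A minimal sketch using the raw counts:

```python
def relative_lift(treatment_rate, baseline_rate):
    """Relative lift of a treatment over a baseline, as a fraction."""
    return treatment_rate / baseline_rate - 1

baseline = 1_414 / 10_928   # Group 5 open rate
group_2 = 1_562 / 10_996    # Group 2 open rate
group_1 = 1_534 / 11_037    # Group 1 open rate

print(f"Group 2 lift: {relative_lift(group_2, baseline):.1%}")  # Group 2 lift: 9.8%
print(f"Group 1 lift: {relative_lift(group_1, baseline):.1%}")  # Group 1 lift: 7.4%
```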

Why? Well, obviously this is only one test. Truly finding the answer would require analyzing HubSpot’s entire testing-optimization cycle. But if one were to speculate, these were the only two treatments that both made a solid promise (of data or a webinar) AND used one of what we in journalism call the Five Ws (in this case, What and When) to entice subscribers and let them know that opening this email would start them on a path ending with the answer to one of their questions.

That’s just my take. I would love to hear your analysis as well. And here’s what Vreeland had to say about the top performer …

That was the only subject line that opened with “[New Data],” and Vreeland thought that could explain the better result.

He says, “Dan (Zarrella) is known for his interesting analysis of a wide variety of data. It makes sense that those interested in one of Dan’s webinars would be someone who is also excited by the prospect of new data.”

Vreeland adds, “The purpose of the test was to determine the best performing subject line so we could use it on the remainder of our 500,000 email list.”

Both of the top two performing subject lines were used in later email sends during the campaign.

Related Resources

New to B2B Webinars? Learn 6 steps for creating an effective webinar strategy

Whilst there was a clear winner, the difference in performance was respectable but not huge.

I would say the subject lines were all too similar. Very often bigger differences in performance need a totally different approach. I’ve found this can give 30%+ differences.

Tim,

Thanks for the comment. In this case HubSpot was looking for small, incremental increases in opens and clickthroughs. Because its list is huge, any increase creates a large number of leads.

Here’s HubSpot’s Vreeland from the post: “Because our email list is so large, a 0.1% increase in open or clickthrough can result in a large number of form conversions and leads.”

Can you control for people like me who open any and all emails having to do with MECLABS and HubSpot?

The testing aspect of subject lines is great, but I’d really like to see analysis of the types of offers that truly drive traffic. Are white papers more effective than webinars? How do informational incentives compare to financial offers such as discounts and free samples? I would assume the latter would be more effective, but that may not be true in email behavior.

Just wondering if #1 would have been higher had you included “and more…” at the end of the subject line (as Groups 2, 4 and 5 did).

Tim Watson made a point about the subject lines being too similar. But there’s still value in using a test. Haven’t you ever carefully crafted a subject line – only to have a client or other colleagues try to wordsmith it to death? It’s frustrating when you just innately know that the changes have less power. Running a test to show the winner is more cost-effective, saves face and wins friends!

Amy,

I posed your question to our Director of Sciences, Bob Kemper, and here’s what he had to say…

Thanks for your question, Amy. You’ve raised an important element of what drives Email Open rates. Since your (gratifying) comment suggested that you’re already familiar with MECLABS material and principles, you may remember that the factors that affect Open rate include such items as Relevance (to a recipient’s innate and current motivations), attributes of the Subject-line copy such as Urgency and Specificity, etc. Your question touches on the element of Relationship. The probability that an email recipient will open a message is strongly influenced by her perception of the email sender. That’s a key reason that the highest Open-rates by far typically come from a business’ own House-list.

So, instead of something to be ‘controlled’ for per se, ‘relationship’ is one of the attributes you should use to characterize and manage your email lists and your sends, so you can craft and customize your messages and calls-to-action accordingly.

Thanks again for your insightful question, Amy.

All the best,

Bob

Kevin,

Wouldn’t it be great if there were one right answer to those questions? The truth is, we each have to test to see what works best for our audience right here and right now. If you have a complex B2B sale, for example, and a very well-respected brand, information may be extremely valuable to that audience. However, if you have a B2B site selling a very straightforward, basic product — the discount may work better.

We’re constantly trying to explore what really works for other marketers to give you ideas for your own tests. Here are two examples from the paid membership library at our sister site, MarketingSherpa, that you may find helpful …

https://www.marketingsherpa.com/article.php?ident=31672

“Webinar Promotion that Delivers: Use Email, Social, Viral Referrals and Video to Boost Attendance, Drive Lead Gen”

http://www.marketingsherpa.com/content/?q=node/2677

“How to Get Thousands More White Paper Readers & Webinar Attendees: Red Hat’s Initial Test Results”

Very interesting article. Thanks for publishing. I agree with Tim Watson about the subject lines. They are too similar in format, structure, voice, curiosity level, hook, etc.

I like to test completely different angles in my subject lines. It’s tough to find out what will capture someone’s attention and interest in today’s overcrowded inboxes.

Speaking of curiosity, I wonder how other, less obvious, variables might perform in a test for HubSpot beyond the simple subject line modifications.

Is list degradation a factor for them? Does the average length of time a subscriber has been on their list affect engagement?

Is the time of day, 10am vs. 6pm, a factor for HubSpot to consider?

As usual, there’s no universal rule for email. Sometimes it matters and other times not at all. And then it changes in 6 months. Testing rocks.

David, how good is it to send emails to all the email addresses we have in our database? Secondly, do emails sent from HubSpot or any other sender land in the spam box? If they do, don’t you think the emails will reach only a small number of people? I am not sure if I have expressed my views correctly, but I am sure you must have got it.

Thanks for this great analyzed report.

Vinaykyaa,

Thank you for the question. I’m not certain I follow what you are asking, but I believe it is, “does sending email to the entire list result in more mail getting caught in a spam filter?”

If you have good data hygiene and keep your list updated by regularly responding to unsubscribe requests and checking for bounces, an email send to an entire list shouldn’t in and of itself lead to more email being trapped by spam filters. Now the subject line or some aspect of the mail content could certainly contribute to that issue.

Data hygiene in the database is always important, and obviously the larger the list — like HubSpot’s — the bigger the challenge in keeping that email list up-to-date and clean.

Great post about a good test! The devil is in the detail when it comes to people making microsecond decisions about whether to click to open and then to click through.

Looking at timing across the globe is also fascinating.

Hi, I want to note that links 2 and 3 are not working in the Related Resources section of this page.

Best Regards,

Michael

Same here… I would have liked to see more variation in the subject lines, in addition to their “looking for small, incremental increases in opens and clickthroughs.” It would have given their test more credibility.

Interesting post.

I think the reason Group 1 and Group 2 did the best is because of the use of the phrases “when should” and “what time should”. Those phrases are very direct and simplify an understanding of the new data being offered. Compared to “learn the science of timing” which could mean “when to” but could also mean “learn the process of discovering when to…” I bet HubSpot’s audience would prefer to just have the guideline to follow (as in ‘tweet between 4:30 and 5:30’) than a research process for which they have to do actual work.

Fascinating stats. I’ve always found that [New Details] and other bracketed text at the beginning gave a marginally better performance, too.

Hi Michael,

Thanks for pointing that out. The links have now been fixed.

I’ll try to use this as a model: “The Science of Timing. When you should tweet, blog, email, and more.” Not exactly that, but something similar; it’s now in my swipe file :). First a statement, then the question. Also, if you have a very big list, say 40,000, a 1% increase in CTR is still quite significant.