On this blog, we usually share tests that our optimization research analysts conduct in our labs with our Research Partners. Sometimes we share tests from our audience as well, but we rarely share our own tests.

In today’s blog post, I want to share a recent email test from our own marketing team. I’m not sharing it because the results were impressive. If you’ve followed MarketingExperiments for any length of time, you’ve certainly seen us share results such as 162% lifts and 59% gains. Today, we’re going to discuss what to do when you get something entirely different.

BACKGROUND

MarketingExperiments and MarketingSherpa are co-hosting the first-ever Optimization Summit. We thought it would be fun to pit one of our top marketers, Senior Marketing Manager Justin Bridegan, against one of our top optimizers, Senior Optimization Manager Adam Lapp. An A/B split test. Head to head. Here are the treatments they created…

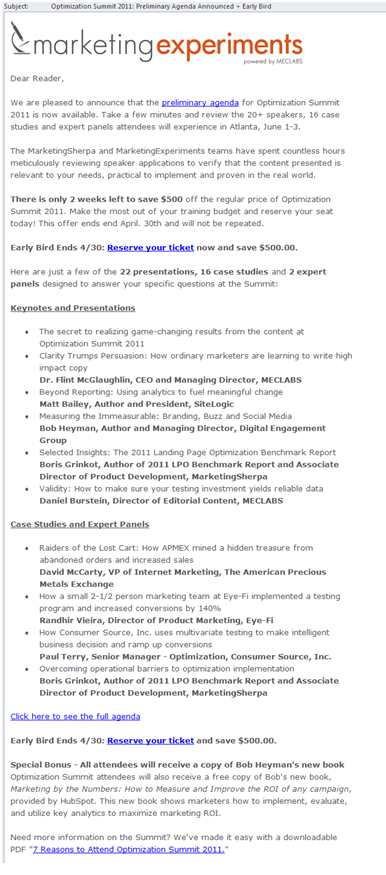

TREATMENT A

We recently released the agenda for Optimization Summit, and that was the main thrust of Justin’s email – details about the speakers. Personally, I loved it. Hey, it had my name in there. (What would you like to learn about validity at Optimization Summit? Let me know.)

It also included an offer with a call to action to save $500 if you buy a ticket by April 30th.

Lastly, it included an incentive. All attendees will receive a free copy of keynote speaker Bob Heyman’s new book, “Marketing by the Numbers: How to Measure and Improve the ROI of any campaign,” courtesy of premier sponsor HubSpot.

“The purpose of this email went beyond just getting a click; it was also to show the breadth and depth of the upcoming Optimization Summit by including some of the agenda,” Justin said.

“At certain times in a marketing campaign you need to go beyond the basic information or selling points and give the audience the real value or ‘meat’ they are looking for. With the agenda being released, this seemed to be a prime opportunity to give them more.”

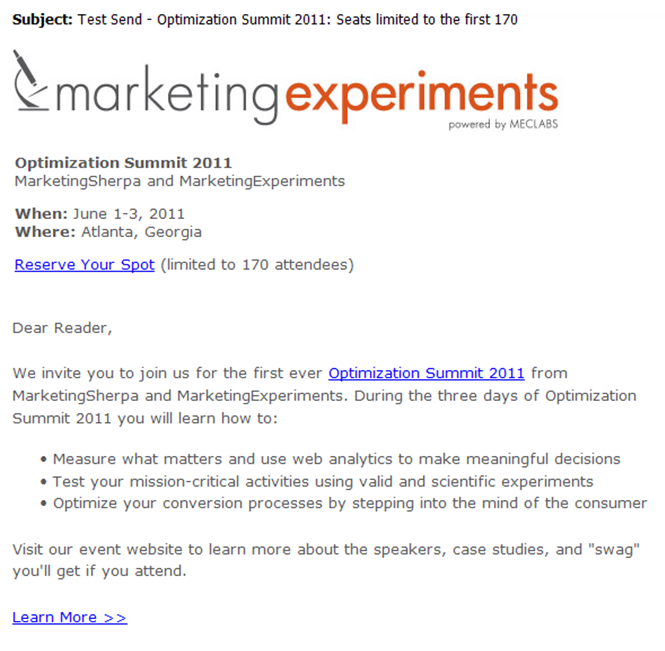

TREATMENT B

Adam’s approach was different. He was just trying to get the click. He immediately states what the reader is looking at – an invite to an event being held June 1-3 in Atlanta. He also plays up the limited nature of the opportunity – we will only be selling 170 tickets. And he does it quickly. Justin was spinning a yarn like a storyteller; Adam was trying to get in and out quickly, like a ninja.

RESULTS

Now let’s take a look at the results…

Well, there were no results. And by that I mean, when our Database and Analytics Specialist, Zlatko Papic, sent me the metrics and we ran them through the MECLABS Test Protocol, we were not able to identify a statistically significant difference in clickthrough rate between the two treatments.
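The post doesn’t publish the send or click counts, and the MECLABS Test Protocol isn’t described here, so what follows is only a sketch of the general idea behind “no statistically significant difference”: a standard two-proportion z-test on clickthrough rates, not the protocol itself. The function name and the counts are hypothetical, and the test relies on a normal approximation that assumes reasonably large lists.

```python
import math


def two_proportion_z_test(clicks_a, sends_a, clicks_b, sends_b):
    """Return (z, two-sided p-value) for the difference between two clickthrough rates.

    Hypothetical helper for illustration; uses the normal approximation,
    which assumes large send counts.
    """
    ctr_a = clicks_a / sends_a
    ctr_b = clicks_b / sends_b
    # Pooled CTR under the null hypothesis that both treatments share one true rate
    pooled = (clicks_a + clicks_b) / (sends_a + sends_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sends_a + 1 / sends_b))
    z = (ctr_a - ctr_b) / std_err
    # Two-sided p-value: P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for a standard normal Z
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts for illustration only; not the actual results of this test.
z, p = two_proportion_z_test(clicks_a=420, sends_a=10_000, clicks_b=450, sends_b=10_000)
print(f"z = {z:.2f}, p = {p:.2f}")  # prints "z = -1.04, p = 0.30"
```

With these made-up numbers, a 0.3-point gap in CTR yields a p-value of about 0.30, far above the common 0.05 cutoff, so the two emails would be treated as statistically indistinguishable even though one “won” on raw clicks.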

At first blush, this is quite surprising. After all, these are two radically different approaches. Shouldn’t there be an equally radically different result? Why wasn’t there?

Here’s my guess. As Dr. Flint McGlaughlin says, “High motivation will overcome a bunch of sins.”

In other words, our audience is just so darn interested in the Optimization Summit, it almost doesn’t matter what treatment we put in front of them.

For example, if you were sending a credible email from a credible brand offering a truly free iPad to your email list, you almost wouldn’t need to say anything. Ours is an audience of optimization experts that has come to rely on MarketingExperiments for quality testing and optimization information for more than ten years. It makes sense they’d be interested in our first-ever Optimization Summit.

In fairness, the flip side is also possible: the product could be so bad, and have so little value, that your marketing treatments have no impact either. Let’s just hope that’s not the case.

And, as Adam said, “It should happen. It’s just part of testing. Sometimes we learn that certain optimization changes don’t make a difference.”

WHERE TO GO FROM HERE?

So, if you find yourself in a similar place in your testing process, what should you do next? I asked Adam, and here’s what he had to say…

- Try another radical approach to confirm your assumption.

- Segment your list. You may find that, in reality, only a small fraction of your audience is driving the response you’re seeing, and while your two treatments seem radically different, they’re really taking the same approach. So, segment your list. Send your most loyal customers (chosen by purchase history, CTR history, or whatever metric matters to you) the standard, run-of-the-mill email, but test different value propositions on the next tier of your list to see what will really get them to act.
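One way to set up the segmented test Adam describes, sketched under stated assumptions: historical CTR stands in as the loyalty metric, the 20% threshold is arbitrary, and `Subscriber`, `assign_segments`, and the variant names are all hypothetical. Loyal subscribers get the standard email, while everyone else is randomly split across value-proposition variants.

```python
import random
from dataclasses import dataclass


@dataclass
class Subscriber:
    email: str
    # Hypothetical loyalty metric; purchase history would work the same way
    historical_ctr: float


def assign_segments(subscribers, loyal_ctr_threshold, variants, seed=0):
    """Hypothetical helper: map each email address to the treatment it should receive.

    Subscribers at or above the threshold get the "standard" email; the rest
    are shuffled and dealt round-robin across the value-proposition variants.
    """
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    assignments = {}
    next_tier = []
    for sub in subscribers:
        if sub.historical_ctr >= loyal_ctr_threshold:
            assignments[sub.email] = "standard"
        else:
            next_tier.append(sub)
    # Shuffle, then deal round-robin so each variant gets a near-equal, random group
    rng.shuffle(next_tier)
    for i, sub in enumerate(next_tier):
        assignments[sub.email] = variants[i % len(variants)]
    return assignments


# Hypothetical list: two loyal subscribers, eight in the next tier
subscribers = [
    Subscriber(f"user{i}@example.com", 0.5 if i < 2 else 0.01) for i in range(10)
]
plan = assign_segments(subscribers, loyal_ctr_threshold=0.20,
                       variants=["value_prop_1", "value_prop_2"])
```

Randomizing within the next tier, rather than hand-picking who sees which value proposition, is what lets a later significance test attribute a difference in CTR to the message itself.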

In hindsight, the second point may well explain our test results here. We really didn’t discuss the value proposition of the Optimization Summit itself. Since we’ve been talking about it for the past month or so, we just assumed our audience knew all about its value. More likely, only our most loyal email subscribers knew enough to be motivated to click without a real presentation of the Summit’s value proposition.

“You don’t need to optimize or design an email or Web page for the 5% of people that are highly motivated and want to buy your product,” Adam said. “You have to optimize for the 95% that are just checking you out and sizing you up. The 5% of people will buy no matter what.”

In the end, we may never know. It looks like the joint MarketingExperiments/MarketingSherpa Optimization Summit will be sold out by the end of this month. If you have a ticket (and are among that upper 5%), I look forward to seeing you in Atlanta. If not, you can always buy next year. We’d be more than happy to change that to the “upper 6%” to accommodate you.

Related Resources

Optimization Summit 2011 – June 1-3, Save $500 when you buy a ticket by April 30th

Email Marketing: Testing subject lines

Email Copy: Half the words, 16% higher clickthrough rate
