Online testing is an exciting venture. While it requires a number of up-front resources and a somewhat long-term commitment, it really can transform your company’s earned media online into a living laboratory for building customer theory.

I’ve learned a few critical lessons in my journey into online testing, and this one is likely the most important.

Tests never perform as expected

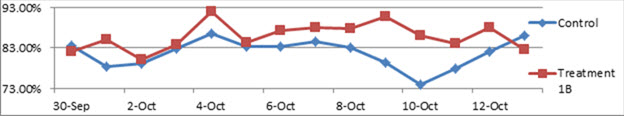

We believe as long as we get a test set up and live, that one version will be performing much better than the other, and everything will be easy to figure out. In fact, we don’t just expect this for the final test result, we expect it for the daily results, too, like this graph:

Example #1: Real daily test results from an archived test

In the graph above, Treatment 1B mostly beats the control, both lines generally rise and fall together, and the results suggest that Treatment 1B is likely the more effective version.

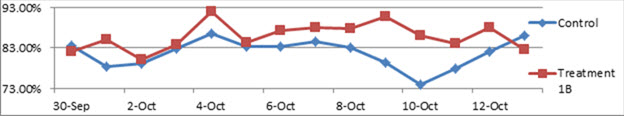

But, what do you do when you see results like in Example #2?

Example #2: Real daily test results from an archived test

The lines trend somewhat together but crisscross: one version is winning, then the other, then the first again. If one ends up showing a win, can you really take it seriously after seeing this graph?

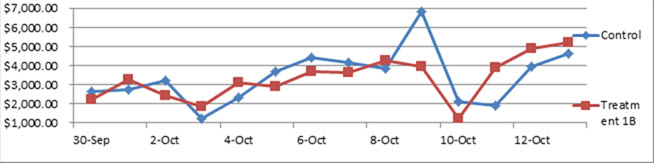

What happens if two different versions beat the control by virtually the same amount at the same time?

Example #3: Actual treatments from the same test that both significantly beat the control by virtually the same margin

I can’t recall exactly how many times this has happened here in the laboratory, but it happens often. Tests don’t always perform the way we expect or want them to.
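Whether a win like those in Examples #2 and #3 is more than day-to-day noise is usually judged on cumulative totals at the end of the planned test, not on the daily lines. A common textbook check for a difference in conversion rates is the two-proportion z-test, sketched below. This is a minimal sketch, not necessarily the method our team used; the function name and the visitor and lead counts in the usage example are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates B - A.

    conv_a, conv_b: conversions (e.g. leads) in each version.
    n_a, n_b: visitors in each version.
    Uses the pooled-proportion standard error (the textbook form of the test).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Under the null hypothesis both versions share one conversion rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical cumulative totals: control 200 leads / 10,000 visitors,
# treatment 260 leads / 10,000 visitors.
z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these made-up totals, z comes out at roughly 2.8 and the two-sided p-value is well under 0.05. Re-running a check like this every day and stopping as soon as it crosses a threshold inflates the false-positive rate, which is one more reason not to read too much into crisscrossing daily lines.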

You can’t rewind what’s already been played

Once your test is closed and you have your data, there’s no going back to collect something you need if it wasn’t already being captured.

That means if you don’t get a solid lift, chances are you won’t be able to distill enough learning to make up for the resources you invested. And even if you do get a lift, you won’t be set up to understand why.

You’ve got to over-measure to truly understand

Anticipating this issue, our team set up a particular homepage test to track all the expected metrics PLUS additional channel-specific metrics (i.e., the difference in performance between referral traffic and search-engine traffic). Keep in mind that for all three versions, a submission had to include eight form fields to be counted as a lead.

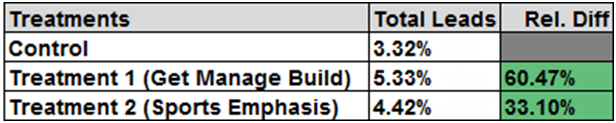

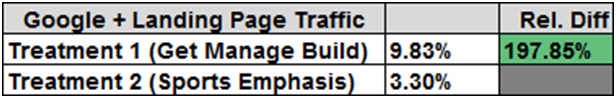

If you look closely, you’ll see that the only difference between Treatments #1 and #2 is the primary messaging in the introductory area. Here are the results:

Across all traffic, Treatment #1 appears to be the winner (both results are statistically significant). However, because Treatment #2 was also showing positive results, we continued tracking the two treatments against each other, looking more closely at the extra channel metrics we had chosen to track from the beginning. Here is what we discovered:

Treatment #1’s results were lower across all traffic because it underperformed with referral traffic while performing extremely well with organic and landing-page traffic. Treatment #2 showed the complementary pattern: it performed well with referral traffic sources.

When we looked more closely at how visitors from the underperforming sources were arriving, the result made sense: Treatment #1 seemed to repeat information those visitors already understood. With Google and landing-page traffic, by contrast, it provided a critical introduction to the company’s offering.
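A channel-level breakdown like this is straightforward once each lead is recorded with its traffic source. The sketch below tallies leads and visitors per (treatment, channel) pair, compares the all-traffic rates, and picks the best treatment for each channel. The channel names and every count are invented for illustration, chosen so the pattern mirrors the story above (Treatment #1 wins overall but loses on referral traffic); they are not this test’s data.

```python
from collections import defaultdict

# Hypothetical tallies: (treatment, channel) -> (leads, visitors).
# Invented numbers for illustration only.
results = {
    ("T1", "referral"): (30, 2000),
    ("T1", "organic"): (90, 2000),
    ("T1", "landing_page"): (80, 1500),
    ("T2", "referral"): (70, 2000),
    ("T2", "organic"): (60, 2000),
    ("T2", "landing_page"): (50, 1500),
}


def aggregate_rates(results):
    """Conversion rate per treatment with all channels pooled together."""
    totals = defaultdict(lambda: [0, 0])  # treatment -> [leads, visitors]
    for (treatment, _channel), (leads, visitors) in results.items():
        totals[treatment][0] += leads
        totals[treatment][1] += visitors
    return {t: leads / visitors for t, (leads, visitors) in totals.items()}


def segment_rates(results):
    """Conversion rate for each (treatment, channel) pair."""
    return {key: leads / visitors for key, (leads, visitors) in results.items()}


def best_by_channel(results):
    """Treatment with the highest conversion rate within each channel."""
    best = {}  # channel -> (treatment, rate)
    for (treatment, channel), rate in segment_rates(results).items():
        if channel not in best or rate > best[channel][1]:
            best[channel] = (treatment, rate)
    return {channel: treatment for channel, (treatment, _rate) in best.items()}


print(aggregate_rates(results))   # T1 wins when all traffic is pooled
print(best_by_channel(results))   # but T2 wins within referral traffic
```

Each segment holds only part of the traffic, and comparing many segments raises the odds of a chance "win," so per-channel differences deserve their own significance check before acting on them.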

Greater discoveries and gains come with more granular measurement

Because we over-measured in anticipation of additional discoveries, we were able to find and capture that larger gain by permanently showing Treatment #1 to its ideal traffic groups and Treatment #2 to the referral traffic groups.
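Serving each treatment to its best-performing traffic group amounts to a lookup from traffic channel to page version. Everything in this sketch is hypothetical: the channel rules (a `/lp/` path prefix for landing pages, a Google referrer for organic search, any other external referrer for referral), the `www.example.com` host, and the fallback to Treatment #1 for unclassified traffic are assumptions for illustration, not how our pages were actually configured.

```python
from urllib.parse import urlparse

# Hypothetical channel -> treatment mapping mirroring the decision described above.
TREATMENT_BY_CHANNEL = {
    "organic": "treatment_1",
    "landing_page": "treatment_1",
    "referral": "treatment_2",
}
# Assumption: unclassified traffic (e.g. direct visits) falls back to the overall winner.
DEFAULT_TREATMENT = "treatment_1"


def classify_channel(referrer: str, landing_path: str, own_host: str = "www.example.com") -> str:
    """Very rough, hypothetical channel classifier for illustration only."""
    if landing_path.startswith("/lp/"):
        return "landing_page"
    host = urlparse(referrer).hostname or ""  # hostname is None for an empty referrer
    if not host or host == own_host:
        return "direct"
    if host == "google.com" or host.endswith(".google.com"):
        return "organic"
    return "referral"


def choose_treatment(referrer: str, landing_path: str) -> str:
    """Pick the page version to serve for this visit's traffic channel."""
    channel = classify_channel(referrer, landing_path)
    return TREATMENT_BY_CHANNEL.get(channel, DEFAULT_TREATMENT)


print(choose_treatment("https://partner-site.org/post", "/"))  # referral visitor
```

In practice, referrer headers are often missing or stripped, so channel assignment usually leans on campaign tags (such as UTM parameters) or the analytics platform’s own channel grouping rather than the referrer alone.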

Even if we hadn’t achieved a gain in this test, collecting as many of the four metric groups as possible would have set us up to plan and build a better next test.

Related Resources:

Test Plan: Build better marketing tests with the Metrics Pyramid

Marketing Analytics: 6 simple steps for interpreting your data

Online Marketing Tests: A data analyst’s view of balancing risk and reward