In online testing, there are plenty of things that can go wrong if you’re not careful.

Some of the more familiar examples that come to mind are:

- Testing during promotions

- Artificial optimization

- Validity threats from instrumentation

These are just some of the threats that can make your tests look conclusive when they are actually flawed.

If you are a skeptic like me, that means building a healthy amount of doubt into your interpretation of data.

Doubt can be problematic at times because good marketing decisions rely on confidence in your results.

So, how can you establish more trust in your data?

One way to curb those doubts is a testing method called double, or dual, control testing.

What is a double control?

The idea behind testing with a double control is to compare two identical pages, which should cause identical visitor behavior.

Yet we sometimes see a difference between the two, which likely indicates that visitors were split unevenly.

If your highly motivated visitors are unevenly split between two identical pages, this will also affect the validity of your key performance indicators.
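To make the uneven-split idea concrete, here is a minimal simulation sketch. It assumes a hypothetical pool of 1,000 visitors, 50 of whom are "highly motivated," and assigns each visitor at random to one of two identical controls. The function names, the pool sizes and the 50/50 random assignment are all illustrative assumptions, not details from any particular testing tool.

```python
import random


def split_visitors(n_visitors, n_motivated, seed):
    """Randomly assign visitors to two identical control pages.

    Each visitor is represented by a boolean: True means "highly motivated".
    All numbers here are hypothetical and only illustrate the idea.
    """
    rng = random.Random(seed)
    visitors = [True] * n_motivated + [False] * (n_visitors - n_motivated)
    arms = {"control_1": [], "control_2": []}
    for is_motivated in visitors:
        # Simple 50/50 coin flip per visitor (an assumed assignment rule)
        arms[rng.choice(["control_1", "control_2"])].append(is_motivated)
    return arms


def motivated_share(arm):
    """Fraction of highly motivated visitors in one arm."""
    return sum(arm) / len(arm) if arm else 0.0


if __name__ == "__main__":
    for seed in range(5):
        arms = split_visitors(1000, 50, seed)
        print(
            seed,
            round(motivated_share(arms["control_1"]), 3),
            round(motivated_share(arms["control_2"]), 3),
        )
```

Running it with a few different seeds shows how the share of motivated visitors in each control can drift apart purely by chance, even though the pages are identical.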

A tale of two controls

Simply adding a duplicate of the original page you are testing allows you to easily spot visitor behavior that is not influenced by the variables you are testing.

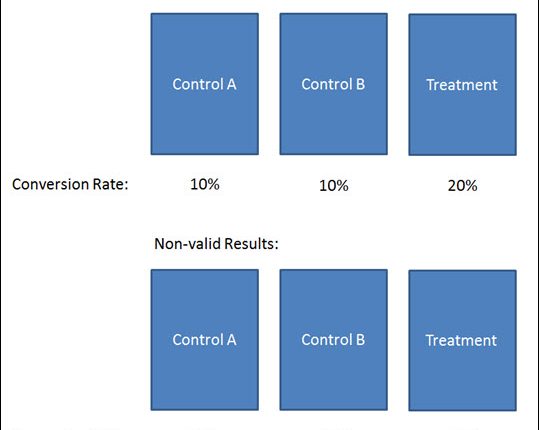

When testing with a double control, you will see one of two outcomes:

First, the two identical control pages show no significant difference in visitor behavior.

If both control versions have similar conversion rates (or similar values for whichever metric you have selected as your KPI), you have stronger grounds to trust your results and can move forward with interpreting your test.

Second, the two identical control pages show a significant difference in visitor behavior.

If the two control pages perform significantly differently in conversion rate, your results may not be valid, but you can now analyze the data and determine what caused the difference between the two identical pages.
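One common way to decide which of the two outcomes you are looking at is a two-proportion z-test on the conversion rates of the two controls. The sketch below is an illustration under stated assumptions, not a prescribed method: the function name, the sample counts and the 0.05 threshold are all hypothetical, and your testing platform may use a different statistic.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.

    conv_a, conv_b: number of converting visitors in each control
    n_a, n_b: number of visitors in each control
    Returns (z, p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both pages convert equally
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts for two identical control pages
    z, p = two_proportion_z_test(200, 5000, 205, 5000)
    verdict = "controls differ" if p < 0.05 else "no significant difference"
    print(f"z = {z:.2f}, p = {p:.3f}: {verdict}")
```

A large p-value corresponds to the first outcome (the controls behave alike), while a small one corresponds to the second and is a signal to investigate before trusting the treatment results.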

Don’t forget about the outliers

If there’s one caveat I would offer here, it’s to remember the outliers when interpreting results or diagnosing problems with a double control approach.

These are the visitors who repeatedly return and complete the action you are measuring, which artificially drives up the conversion rate.
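As a rough illustration of how a single repeat converter can distort a comparison, the sketch below contrasts a raw conversion rate (every conversion counts) with a capped rate (each visitor counts at most once). Capping at one conversion per visitor is just one possible mitigation, assumed here for the example; the visitor IDs and counts are made up.

```python
def raw_and_capped_rates(conversions_by_visitor):
    """Return (raw_rate, capped_rate) for one page.

    conversions_by_visitor: dict mapping a visitor ID to the number of
    conversions recorded for that visitor (hypothetical data shape).
    raw_rate counts every conversion; capped_rate counts each visitor once.
    """
    visitors = len(conversions_by_visitor)
    if visitors == 0:
        return 0.0, 0.0
    total_conversions = sum(conversions_by_visitor.values())
    converting_visitors = sum(
        1 for count in conversions_by_visitor.values() if count > 0
    )
    return total_conversions / visitors, converting_visitors / visitors


if __name__ == "__main__":
    # Two identical controls; visitor "v6" converts six times
    control_1 = {"v1": 0, "v2": 1, "v3": 0, "v4": 0}
    control_2 = {"v5": 0, "v6": 6, "v7": 0, "v8": 0}
    print(raw_and_capped_rates(control_1))
    print(raw_and_capped_rates(control_2))
```

In this made-up data the raw rates of the two controls differ sharply, while the capped rates are identical, which is the kind of gap worth raising with your analyst.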

Make sure you raise how to mitigate those outliers when discussing risks with your data analyst. This will help you interpret customer behavior with confidence, while satisfying your inner skeptic in the process.

Feel free to share some of your experiences with dual control testing in the comments below.


I really like this method, Ben. I’ve previously used AA testing, which I guess follows the same principle (i.e. identifying differences between two control groups), but your method saves time by running the AA testing alongside the AB testing. I like it!

Can AA testing somehow be used to measure the confidence level (or statistical significance) of my experiments?