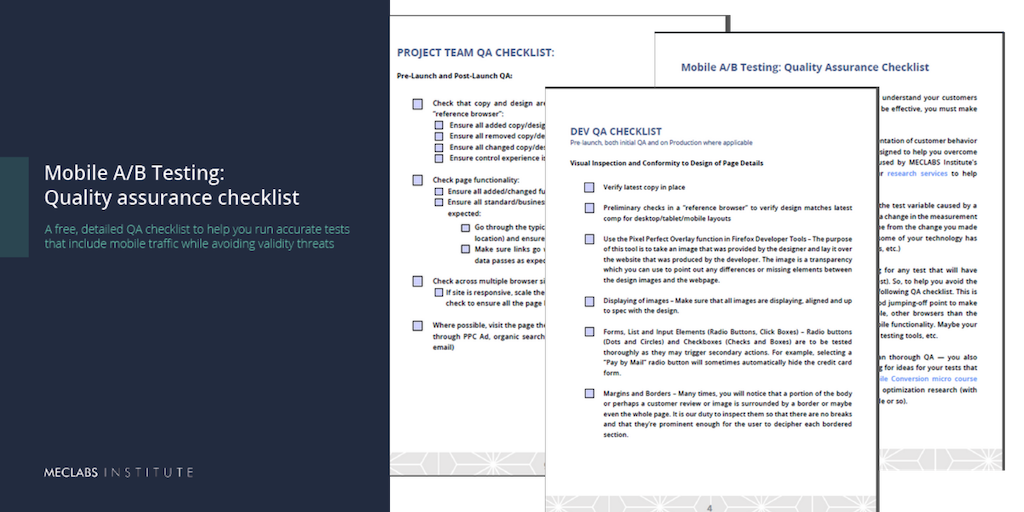

Mobile A/B Testing: Quality assurance checklist

A free, detailed QA checklist to help you run accurate tests that include mobile traffic while avoiding validity threats

Real-world behavioral tests are an effective way to better understand your customers and optimize your conversion rates. But for this testing to be effective, you must make sure it is accurately measuring customer behavior.

One reason A/B split tests fail to give a correct representation of customer behavior is validity threats. This series of checklists is designed to help you overcome one of them, Instrumentation Effect. It is based on actual processes used by MECLABS Institute’s designers, developers and analysts when conducting our research services to help companies improve marketing performance.

MECLABS defines Instrumentation Effect as “the effect on the test variable caused by a variable external to an experiment, which is associated with a change in the measurement instrument.” In other words, the results you see do not come from the change you made (say, a different headline or layout), but from some of your technology affecting the results (slowed load time, miscounted analytics, etc.).

Avoiding Instrumentation Effect is even more challenging for any test that will have traffic from mobile devices (which today is almost every test). So, to help you avoid the Instrumentation Effect validity threat, we’re providing the following QA checklist. This is not meant for you to follow verbatim, but to serve as a good jumping-off point to make sure your mobile tests are technically sound. For example, browsers other than the ones listed here may be more important for your site’s mobile functionality. Or maybe your landing page doesn’t have a form, or you use different testing tools.

Of course, effective mobile tests require much more than thorough QA; you also must know what to test to improve results. If you’re looking for ideas for your tests that include mobile traffic, you can register for the free Mobile Conversion micro course from MECLABS Institute based on 25 years of conversion optimization research (with increasing emphasis on mobile traffic in the last half decade or so).

There’s a lot of information here, and different people will want to save this checklist in different ways. You can scroll through the article you’re on to see the key steps of the checklist. Or use the form on this page to download a PDF of the checklist.

Roles Defined

The following checklists are broken out by teams serving specific roles in the overall mobile development and A/B testing process. The checklists are designed for cross-functional teams: people in different roles each bring their own viewpoint and expertise to the project and evaluate whether the mobile landing page and A/B test are functioning properly, both before launch and once the test is live.

For this reason, if you have people serving multiple roles (or you’re a solopreneur and do all the work yourself), these checklists may be repetitive for you.

Here is a quick look at each team’s overall function in the mobile landing page testing process, along with the unique value it brings to QA:

Dev Team – These are the people who build your software and websites, which could include both front-end development and back-end development. They use web development skills to create websites, landing pages and web applications.

For many companies, quality assurance (QA) would fall in this department as well, with the QA team completing technical and web testing. While a technical QA person is an important member of the team for ensuring you run valid mobile tests, we have included other functional areas in this QA checklist because different viewpoints from different departments will help decrease the likelihood of error. Each department has its own unique expertise and is more likely to notice specific types of errors.

Value in QA: The developers and technological people are most likely to notice any errors in the code or scripts and make sure that the code is compatible with all necessary devices.

Project Team – Depending on the size of the organization, this may be a dedicated project management team, a single IT or business project manager, or a passionate marketing manager keeping track of and pushing to get everything done.

It is the person or team in your organization that coordinates work and manages timelines across multiple teams, and that ensures project work is progressing as planned and project objectives are being met.

Value in QA: In addition to making sure the QA doesn’t take the project off track and threaten the launch dates of the mobile landing page test, the project team are the people most likely to notice when business requirements are not being met.

Data Team – The data scientist(s), analyst(s) or statistician(s) helped establish the measure of success (KPI – key performance indicator) and will monitor the results for the test. They will segment and gather the data in the analytics platform and assemble the report explaining the test results after they have been analyzed and interpreted.

Value in QA: They are the people most likely to notice any tracking issues from the mobile landing page not reporting events and results correctly to the analytics platform.

Design Team – The designer(s) who create the design comps for the control and treatment(s), along with the image slices and icons handed off to the dev team.

Value in QA: They are the people most likely to notice when the built page departs from the comps in layout, spacing, imagery or typography, or when interactive elements do not behave as designed on desktop, tablet and mobile.

DEV QA CHECKLIST

Pre-launch, both initial QA and on Production where applicable

Visual Inspection and Conformity to Design of Page Details

- Verify latest copy in place

- Preliminary checks in a “reference browser” to verify design matches latest comp for desktop/tablet/mobile layouts

- Use the Pixel Perfect Overlay function in Firefox Developer Tools – The purpose of this tool is to take an image that was provided by the designer and lay it over the website that was produced by the developer. The image is a transparency which you can use to point out any differences or missing elements between the design images and the webpage.

- Displaying of images – Make sure that all images are displaying, aligned and up to spec with the design.

- Forms, List and Input Elements (Radio Buttons, Click Boxes) – Radio buttons (Dots and Circles) and Checkboxes (Checks and Boxes) are to be tested thoroughly as they may trigger secondary actions. For example, selecting a “Pay by Mail” radio button will sometimes automatically hide the credit card form.

- Margins and Borders – Many times, you will notice that a portion of the body or perhaps a customer review or image is surrounded by a border or maybe even the whole page. It is our duty to inspect them so that there are no breaks and that they’re prominent enough for the user to decipher each bordered section.

- Copy accuracy – Consistency between typography, capitalization, punctuation, quotations, hyphens, dashes, etc. The copy noted in the webpage should match any documents provided pertaining to copy and text unless otherwise noted or verified by the project manager/project sponsor.

- Font styling (Font Color, Format, Style and Size) – To ensure consistency with design, make sure to apply the basic rules of hierarchy for headers across different text modules such as titles, headers, body paragraphs and legal copies.

- Link(s) (Color, Underline, Clickable)

Web Page Functionality: Verify all page functionality works as expected (ensure treatment changes didn’t impact page functionality)

- Top navigation functionality – Top menu, side menu, breadcrumb, anchor(s)

- Links and redirects are correct

- Media – Video, images, slideshow, PDF, audio

- Form input elements – drop down, text fields, check and radio module, fancy/modal box

- Form validation – Error notification, client-side errors, server-side errors, action upon form completion (submission confirmation), SQL injection

- Full Page Functionality – Search function, load time, JavaScript errors

- W3C Validation – CSS Validator (http://jigsaw.w3.org/css-validator/), markup validator (http://validator.w3.org/)

- Verify split functional per targeting requirements

- Verify key conversion scenario (e.g., complete a test order, send test email from email system, etc.) – If not already clear, QA lead should verify with project team how test orders should be placed

- Where possible, visit the page as a user would to ensure targeting parameters are working properly (e.g., use URL from the PPC ad or email, search result, etc.)
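Part of the “links and redirects are correct” check above can be scripted before anyone clicks through by hand. The following is a minimal sketch, not a MECLABS tool: it assumes you have the page’s HTML as a string, and the function name `find_suspect_links`, the `allowed_hosts` parameter and the example hosts are all hypothetical. It flags placeholder links and links that point at an unexpected host (for example, a staging domain left in a production page).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs is a list of (name, value) pairs; href may be absent
            self.hrefs.append(dict(attrs).get("href"))


def find_suspect_links(html, base_url, allowed_hosts):
    """Return (href, reason) pairs for links that deserve a manual look."""
    collector = LinkCollector()
    collector.feed(html)
    suspects = []
    for href in collector.hrefs:
        if not href or href.strip() in ("#", "javascript:void(0)"):
            suspects.append((href, "empty or placeholder link"))
            continue
        absolute = urlparse(urljoin(base_url, href))
        if absolute.scheme not in ("http", "https"):
            continue  # skip mailto:, tel: and similar links in this sketch
        if absolute.netloc not in allowed_hosts:
            suspects.append((href, "unexpected host " + absolute.netloc))
    return suspects


# Hypothetical page fragment and hosts, for illustration only
page = '<a href="/cart">Cart</a><a href="#">Buy</a><a href="http://staging.example.com/x">X</a>'
for href, reason in find_suspect_links(page, "https://www.example.com/landing", {"www.example.com"}):
    print(href, "->", reason)
```

This only inspects the markup. It does not follow redirects or confirm that the destination loads, so the manual click-through in the checklist still applies.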

Tracking Metrics

- Verify tracking metrics are firing in browser, and metric names match requirements – Check the debugger to confirm they fire as expected

- Verify reporting within the test/analytics tool where possible – Success metrics and click tracking in Adobe Target, Google Content Experiments, Google Analytics, Optimizely, Floodlight analytics, email data collection, etc.
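Comparing metric names by eye is error-prone, especially when the only difference is capitalization. As a rough sketch, assuming you can copy the required names from the tracking document and the fired names from your debugger into two lists, a comparison could look like this. The function name and the example metric names are hypothetical.

```python
def compare_metric_names(required, fired):
    """Compare required metric names with the names seen firing in the browser."""
    required_set, fired_set = set(required), set(fired)
    missing = required_set - fired_set      # required but never seen firing
    unexpected = fired_set - required_set   # fired but not in the requirements

    def normalize(name):
        return name.strip().lower().replace(" ", "_")

    # Pairs that differ only by case, spacing or surrounding whitespace
    near_matches = sorted(
        (r, f) for r in missing for f in unexpected if normalize(r) == normalize(f)
    )
    return {
        "missing": sorted(missing),
        "unexpected": sorted(unexpected),
        "near_matches": near_matches,
    }


# Hypothetical metric names, for illustration only
report = compare_metric_names(
    required=["cta_click", "form_submit", "order_complete"],
    fired=["cta_click", "Form_Submit", "page_scroll"],
)
print(report)
```

A near match usually means the metric is firing under a slightly different name than the one in the requirements, which is worth raising before launch because it can split or hide data in the analytics tool.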

Back End Admin Panel

- Database includes accurate offer information

- Tracking correct order information from customer (data collection)

- Ecommerce funds are transferred to and from buyer and seller accounts – Test for different account types. For example, test in PayPal Sandbox (https://developer.paypal.com/developer/accounts/). Also, test credit cards (test numbers available at https://www.paypalobjects.com/en_AU/vhelp/paypalmanager_help/credit_card_numbers.htm)

- Validate various forms of payments are correctly charged when combined: E.g., gift card, rewards, discount code, promotional offer, credit cards, etc. (test numbers available at https://www.paypalobjects.com/en_AU/vhelp/paypalmanager_help/credit_card_numbers.htm)

- Payment Validation and Errors – Ensure the character limiter is effective for all types of credit cards (Visa & Mastercard: 16, Amex: 15)
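To make the payment validation check concrete, here is a minimal sketch of the kind of rule a card field is expected to enforce: a length limit per card brand plus the standard Luhn checksum. It is an illustration under simplifying assumptions, not your payment processor’s logic. The prefix rules are deliberately reduced (Visa starting with 4, Mastercard with 51 to 55, American Express with 34 or 37, which uses 15-digit numbers), and the names `CARD_RULES`, `luhn_valid` and `check_card` are hypothetical.

```python
import re

# Simplified, assumed rules for this sketch: (brand, prefix pattern, allowed lengths)
CARD_RULES = [
    ("amex", re.compile(r"^3[47]"), {15}),
    ("mastercard", re.compile(r"^5[1-5]"), {16}),
    ("visa", re.compile(r"^4"), {16}),
]


def luhn_valid(digits):
    """Luhn checksum: double every second digit from the right, sum, mod 10."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def check_card(number):
    """Return (brand, is_valid) for a card number typed with spaces or dashes."""
    digits = re.sub(r"[ -]", "", number)
    if not (digits.isascii() and digits.isdigit()):
        return (None, False)
    for brand, prefix, lengths in CARD_RULES:
        if prefix.match(digits):
            return (brand, len(digits) in lengths and luhn_valid(digits))
    return (None, False)


# Widely published test numbers, not real cards
print(check_card("4111 1111 1111 1111"))
print(check_card("378282246310005"))
print(check_card("37828224631000"))  # one digit short for Amex
```

During QA, try numbers that are one digit too short and one digit too long for each brand, and confirm the form rejects them with a clear error.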

Notify Project Team and Data Team it is ready for their QA (via email preferably) and indicate which browser is the reference browser. After Project Team initial review, complete full cross-browser/cross-device checks using the “reference browser” as a guide:

Browser Functionality – Windows

- Internet Explorer 7 (IE7)

- IE8

- IE9

- IE10

- IE11

- Modern Firefox

- Modern Chrome

Browser Functionality – macOS

- Modern Safari

- Modern Chrome

- Modern Firefox

Mobile Functionality – Tablet

- Android

- Windows

- iOS

Mobile Functionality – Mobile phone

- Android

- Windows

- iOS

Post-launch, after the test is live to the public:

- Notify Project Team & Data Team the test is live and ready for post-launch review (via email preferably)

- Verify split is open to public

- Verify split functional per targeting requirements

- Where possible, visit the page as a user would to ensure targeting parameters are working properly (e.g., use URL from the PPC ad or email, search result, etc.)

- Test invalid credit cards on a production environment
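One way to back up the “verify split functional” check with numbers, once some live traffic has arrived, is to compare the visitor counts per variation with the split you configured. The checklist itself does not prescribe a method; the sketch below swaps in a standard chi-square goodness-of-fit test for a two-variation test, and the function name and the example counts are hypothetical.

```python
import math


def split_p_value(control_visitors, treatment_visitors, expected_control_share=0.5):
    """p-value for the observed two-way split against the configured split.

    Uses a chi-square goodness-of-fit test with 1 degree of freedom.
    A very small p-value suggests the split is not behaving as configured.
    """
    total = control_visitors + treatment_visitors
    if total == 0:
        raise ValueError("no traffic recorded yet")
    expected_control = total * expected_control_share
    expected_treatment = total - expected_control
    chi2 = (
        (control_visitors - expected_control) ** 2 / expected_control
        + (treatment_visitors - expected_treatment) ** 2 / expected_treatment
    )
    # Survival function of the chi-square distribution with 1 degree of freedom
    return math.erfc(math.sqrt(chi2 / 2))


# Hypothetical visitor counts for a test configured as a 50/50 split
print(split_p_value(5050, 4950))  # small imbalance, plausibly chance
print(split_p_value(5000, 5400))  # larger imbalance, worth investigating
```

A low p-value does not say what is wrong. It is a prompt to look for instrumentation causes such as a redirect that is slow on one variation or a tracking tag that fails on some devices.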

PROJECT TEAM QA CHECKLIST:

Pre-Launch and Post-Launch QA:

- Check that copy and design are correct for control and treatments in the “reference browser”:

  - Ensure all added copy/design elements are there and correct

  - Ensure all removed copy/design elements are gone

  - Ensure all changed copy/design elements are correct

  - Ensure control experience is as intended for the test

- Check page functionality:

  - Ensure all added/changed functionality is working as expected

  - Ensure all standard/business as usual (BAU) functionality is working as expected:

    - Go through the typical visitor path (even beyond the testing page/location) and ensure everything functions as expected

    - Make sure links go where they are supposed to, fields work as expected and data passes as expected from page to page

- Check across multiple browser sizes (desktop, tablet, mobile)

  - If site is responsive, scale the browser from full screen down to mobile and check to ensure all the page breaks look correct

- Where possible, visit the page the way a typical visitor would hit the page (e.g., through PPC Ad, organic search result, specific link/button on site, through email)

DATA QA CHECKLIST:

Pre-Launch QA Checklist (complete on Staging and Production as applicable):

- Verify all metrics listed in the experiment design are present in analytics portal

- Verify all new tracking metrics’ names match metrics’ names from tracking document

- Verify all metrics are present in control and treatment(s) (where applicable)

- Verify conversion(s) are present in control and treatment(s) (where possible)

- Verify any metrics tracked in a secondary analytics portal (where applicable)

- Immediately communicate any issues that arise to the dev lead and project team

- Notify dev lead and project team when Data QA is complete (e-mail preferably)

Post-Launch QA / First Data Pull:

- Ensure all metrics for control and treatment(s) are receiving traffic

- Ensure traffic levels are in line with the pre-test levels used for test duration estimation

- Update Test Duration Estimation if necessary

- Immediately communicate any issues that arise to the project team

- Notify dev lead and project team when first data pull is complete (e-mail preferably)
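The traffic check and the duration update in the first data pull come down to simple arithmetic. The sketch below assumes the required sample size per variation was already calculated during test planning, that traffic is split evenly, and that a 20% deviation from pre-test traffic is the threshold for concern. All of these, along with the function names, are assumptions for illustration.

```python
import math


def traffic_in_line(observed_daily, pretest_daily, tolerance=0.2):
    """True if observed daily traffic is within the tolerance of the pre-test level."""
    return abs(observed_daily - pretest_daily) <= tolerance * pretest_daily


def estimate_duration_days(required_per_variation, variations, observed_daily):
    """Days needed to reach the required sample at the observed daily traffic."""
    return math.ceil(required_per_variation * variations / observed_daily)


# Hypothetical numbers: 7,000 visitors needed per variation, control plus one treatment
planned = estimate_duration_days(7000, 2, observed_daily=1000)
updated = estimate_duration_days(7000, 2, observed_daily=600)
print(planned, updated, traffic_in_line(600, 1000))
```

If the updated estimate is much longer than planned, that is the point to tell the project team, since it affects the launch timeline they are managing.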

DESIGN QA CHECKLIST:

Pre-Launch Review:

- Verify intended desktop functionality (if applicable)

  - Accordions

  - Error states

  - Fixed Elements (nav, growler, etc.)

  - Form fields

  - Hover states – desktop only

  - Links

  - Modals

  - Sliders

- Verify intended tablet functionality (if applicable)

  - Accordions

  - Error states

  - Fixed Elements (nav, growler, etc.)

  - Form fields

  - Gestures – touch device only

  - Links

  - Modals

  - Responsive navigation

  - Sliders

- Verify intended mobile functionality (if applicable)

  - Accordions

  - Error states

  - Fixed Elements (nav, growler, etc.)

  - Form fields

  - Gestures – touch device only

  - Links

  - Modals

  - Responsive navigation

  - Sliders

- Verify layout, spacing and flow of elements

  - Padding/Margin

  - “In-between” breakpoint layouts (as these are not visible in the comps)

  - Any “of note” screen sizes that may affect test goals (For example: small laptop 1366×768 pixels, 620px of height visibility)

- Verify imagery accuracy, sizing and placement

  - Images (Usually slices Design provided to Dev)

  - Icons (Could be image, svg or font)

- Verify Typography

  - Color

  - Font-size

  - Font-weight

  - Font-family

  - Line-height
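For the “in-between” breakpoint layouts in the checklist above, it can help to write down the exact viewport widths to review rather than dragging the browser edge and hoping to catch problems. The following sketch generates such a list from a set of CSS breakpoints. The breakpoint values and the minimum and maximum widths are hypothetical, so substitute the ones from your own stylesheet.

```python
def viewport_widths_to_check(breakpoints, min_width=320, max_width=1920):
    """Return viewport widths at, just below, and midway between breakpoints."""
    edges = sorted(set([min_width, *breakpoints, max_width]))
    widths = set()
    for low, high in zip(edges, edges[1:]):
        widths.add(low)                # layout exactly at the breakpoint
        widths.add((low + high) // 2)  # "in-between" layout not shown in the comps
        widths.add(high - 1)           # last pixel before the next layout kicks in
    widths.add(edges[-1])
    return sorted(widths)


# Hypothetical breakpoints, for illustration only
print(viewport_widths_to_check([480, 768, 1024], min_width=320, max_width=1440))
```

Each width can then be entered in the responsive design mode of your browser’s developer tools and compared against the nearest comp.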

Qualifying questions, if discrepancies are found:

- Is there an extremely strict adherence to brand standards?

- Does it impact the hierarchy of the page information?

- Does it appear broken/less credible?

- Immediately communicate any issues that arise to the dev lead and project team

- Notify dev lead and project team when design QA is complete (e-mail preferably)

To download a free PDF of this checklist, simply complete the below form.

___________________________________________________________________________________

Increase Your Mobile Conversion Rates: New micro course

Hopefully, this Mobile QA Checklist helps your team successfully launch tests that have mobile traffic. But you still may be left with the question — what should I test to increase conversion?

MECLABS Institute has created five micro classes (each under 12 minutes) based on 25 years of research to help you maximize the impact of your messages in a mobile environment.

In the complimentary Mobile Conversion Micro Course, you will learn:

- The 4 most important elements to consider when optimizing mobile messaging

- How a large telecom company increased subscriptions in a mobile cart by 16%

- How the same change in desktop and mobile environments had opposing effects on conversion
