Let’s face it …

Marketing strategies tend to be driven by the HIPPO (highest paid person’s opinion), whether that is the CEO, CMO, director of marketing or the creative leader of an agency.

These strategies are usually byproducts of the reputation, ego and personal preferences that come with an individual approach to test planning.

The key problem with that approach, however, is that the customer becomes an afterthought or is never considered at all.

MECLABS is in the business of either proving or debunking those preferences of the individual marketer (or HIPPO) by using an evidence-based approach to marketing. We try to let customer behavior determine the best approach through testing and optimization.

In the past, some of our Research Partners have even tried to drive a testing strategy through those same preferences. In general, that approach fails miserably and, at best, leads to inconclusive results.

To me, that is worse than an abject failure.

There’s strength (and great test ideas) in numbers

So, what truly makes us any different?

Well, a key ingredient in our secret sauce is … collaboration!

One of our greatest strengths is our preference for approaching testing collaboratively rather than individually.

Now, I won’t say we don’t have strong individual personalities, because we do. I certainly do, and if you talk with any of our staff, you’ll find we are very willing to share our thoughts on why one of our ideas should be the front-runner for your next test.

But … until that idea is actually tested, our staff will all agree that any test idea is fair game.

All roads should lead to customer-centric marketing

A collaborative approach to test design may sound like it could become disorganized or even chaotic, and at times, it can.

So, how do you manage the masses?

You do it by having a group of researchers seek out as many alternative ideas as possible to help Research Partners answer a key question in their marketing:

“If I am your ideal prospect, why should I buy from you, as opposed to any of your competitors?”

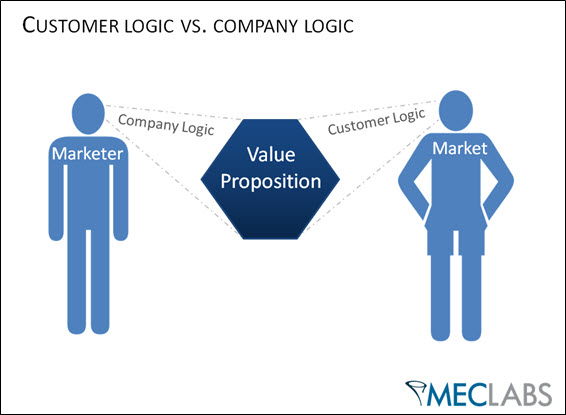

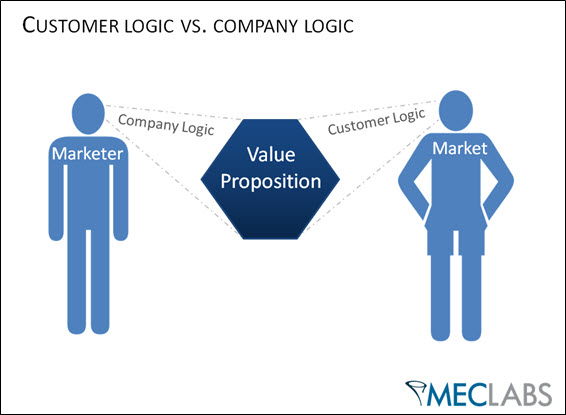

Effective value proposition development requires us to step out of our own perceived needs and into the perceived needs of the prospect.

This is where the primary disconnect between company and customer lies in marketing driven by the HIPPO’s approach.

That is simply because the HIPPO in your company is very rarely your primary prospect.

Ideally, you want everyone’s point of view. In our Peer Review Sessions (PRS), we value ideas from everyone, from our newest analysts to our most seasoned researchers.

It is a dynamic process that values the diversity of the ideas it produces.

Bringing it all together

Here are a few quick points to help you get the most value out of your teams when planning tests collaboratively:

1. Get rid of any preconceived notions

I mentioned this earlier, but I can’t say it enough. You want to pool as many ideas as possible from as many diverse sources as possible. The best way to do this is by emphasizing that every idea is fair game for consideration in testing.

2. Help your core team commit to a specific customer theory hypothesis

Gathering ideas is one thing, but forming a testable hypothesis that expands a Research Partner’s customer knowledge is another. So, while you want to leave the idea door open to everyone, remember that your core test design team should focus its efforts on a specific hypothesis.

3. Design your tests to prove or disprove that hypothesis using ideas that are a best fit

Now that you have a pool of ideas and a testable hypothesis, I recommend you cull through that pool until you have selected the ideas that best help you test your hypothesis.

Consider the risks and rewards, and beware of celebrating “I told you so” testing

One caveat to all of this is that the strategy carries an inherent risk, but it also offers a reward.

If the test produces a lift, our hypothesis was true. If it doesn’t, our hypothesis was false, and that is still something learned that we take with us as we start the process all over again.

Because we want to build on the learning momentum from each testing cycle, this hopefully explains why researchers tend to hate inconclusive results: they tell us neither.

Also, your hypothesis may be proven wrong … but personally, I prefer to be proven wrong.

A tested hypothesis that turns out to be true only reinforces what I already know.

There simply isn’t as much knowledge to gain from “I told you so” test results as there is from “why were we wrong?” results. In my testing experience, being wrong has, at times, been just the catalyst I needed to discover how to better serve customers.

It’s what our team needed to spark new ideas and push the envelope just a little further.

Being wrong helps us all reach new heights that would not have been possible any other way.

Oh, and just a few more things …

Please feel free to share some of your test planning experiences in the comments below, and be sure to watch for an upcoming blog post where I’ll talk a little more about how “losing” tests can sometimes really help build your customer knowledge base.
