Back in 2003, a little blue fish taught us to “just keep swimming.”

Much like Dory, Ryan Hutchings, Director of Marketing, VacationRoost, taught us that even when we aren’t gaining the results we want, just keep testing.

Ryan was one of the presenters at MarketingSherpa MarketingExperiments Web Optimization Summit 2014, where he discussed his marketing experience at VacationRoost — an ecommerce vacation rental wholesaler. During his session, Ryan shared how he and his marketing team were able to:

- Increase the company’s total conversion rate by 12%

- Run more than 50 tests in a year

These results were achieved by employing simultaneous tests for large and small projects. The tests Ryan utilized ran on two separate testing methodologies and allowed VacationRoost’s small marketing team to make the most of its resources.

Because VacationRoost is an aggregation of several smaller companies, the company currently has many different websites and Web properties it has to maintain. “For a marketer, it’s ideal because I have this whole entire playground to essentially do whatever I want with,” Ryan said.

However, not every test leads to overwhelmingly positive results. So what do you do when your costly testing is met with failure?

Make sure retesting is worth the cost

No matter how many free resources are available, there is a cost to testing. Testing takes time, resources and manpower, so make sure the tests you are running are worth the effort. This warning is doubled when it comes to retesting.

In one of the examples Ryan presented, the VacationRoost team wanted to optimize a lead generation form on the site. After trying all of the best practices for form creation and optimization, Ryan and his team still weren’t seeing a lift. It took four failed tests before the team saw any sort of gain. However, because the form was so important to the business, the costs of testing and retesting were worth it.

“We knew that this would have a big impact if we could get a big gain on it,” Ryan said.

Focus on how the conversion sequence applies to your company, not best practices

While there is some truth in the unofficial marketing Bible of best practices, it’s important to remember these are more suggestions than rules.

For Ryan, testing based on best practices led to failure: “[We] shortened the form like everybody says in these conferences … you know, no.”

Even a layout change based on a webinar from MECLABS, the parent company of MarketingExperiments, failed to produce a lift.

Ryan described using “an actual MECLABS webinar that I watched [where] somebody did this layout. And I’m like, ‘Done,’ you know? No. It didn’t do it either. That failed.”

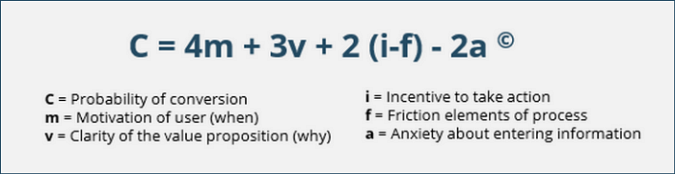

The test that finally produced results was the one that applied all elements from the MECLABS Conversion Sequence to the treatment.

According to Ryan, he had almost given up on optimizing this form. The team ran the fifth and final test, this time heavily utilizing the Conversion Sequence rather than relying on best practices or examples from other companies.

This treatment yielded a 19% lift.

Every company has a different consumer base and a different set of best practices. Because of this, “best practices” are not always best. Always test what is best for your customer, rather than assuming best practices will be successful.

Don’t confine your testing to single A/B split tests

Single factor A/B split tests can lead to some wonderful insights. The biggest appeal of these tests is that you know exactly what factor led to the test’s result — whether that result is positive or negative. While these focused tests can be incredibly informative, they aren’t right for every company or testing campaign.

“We actually don’t do a lot of really single factor tests,” Ryan said, “because, A) we don’t have the volume where I feel like it would pick up fast enough, and B) as long as we’re following that heuristic, we’ve found that every time we do something based off of a heuristic — as long as everything is based off of that — we change all kinds of elements.”

Don’t be afraid to utilize multifactorial A/B split tests. As long as your treatments are guided by the Conversion Sequence and you keep your customers’ needs in the front of your mind, your testing should lead to success. For quick reference, here’s the heuristic again with each of the factors defined:
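The Conversion Sequence heuristic, as MECLABS commonly publishes it, is written out below. The factor definitions are paraphrased, and the coefficients are generally described as showing the relative weight of each factor. This is a thought tool for planning treatments, not an equation to solve.

```latex
\[
  C = 4m + 3v + 2(i - f) - 2a
\]
% C = probability of conversion
% m = motivation of the user
% v = clarity of the value proposition
% i = incentive to take action
% f = friction elements in the process
% a = anxiety about entering information
```

Read this way, motivation and a clear value proposition carry the most weight, while friction and anxiety subtract from the likelihood of conversion. That is consistent with Ryan’s experience that simply shortening the form (a friction-only change) was not enough on its own.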

Watch the full-length video of Ryan’s presentation at MarketingSherpa MarketingExperiments Web Optimization Summit 2014 to learn more about how to recover from and reduce testing failure.

You can follow Kayla Cobb, Copy Editor, MECLABS, on Twitter @itskaylacobb.
